Documenting microdata

Defining microdata

When surveys or censuses are conducted, or when administrative data are recorded, information is collected on each unit of observation. The unit of observation can be a person, a household, a firm, an agricultural holding, a facility, or other. Microdata are the data files resulting from these data collection activities. They contain the unit-level information (as opposed to aggregated data in the form of counts, means, or other). Each row in a microdata file is referred to as an observation. Information on each unit of observation is stored in variables, which can be of different types (e.g. numeric or character variables, with discrete or continuous values). These variables may contain data reported by the respondent (e.g., the marital status of a person), obtained by observation or measurement (e.g., the GPS location of a dwelling or other sensor), or generated by calculation, recoding or derivation (e.g., the sample weight in a survey).

For efficiency reasons, categorical variables are usually stored in numeric format (i.e. coded values). For example, the sex of a respondent may be stored in a variable named ‘Q_01’, and include values 1, 2, and 9 where 1 represents “Male”, 2 represents “Female”, and 9 represents “Unreported”. Microdata must therefore be provided with a data dictionary (i.e., structural metadata) containing the variable and value labels and, for the derived variables (if any), some information on the derivation process.
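As an illustration of why the value labels matter, the sketch below decodes the coded variable from the example above. The dictionary layout and the `decode` helper are hypothetical and are not the format used by the Metadata Editor; the variable label "Sex of respondent" is also an assumption.

```python
# Hypothetical data dictionary entry for the coded variable Q_01.
# The structure is illustrative only, not the Metadata Editor's format.
data_dictionary = {
    "Q_01": {
        "label": "Sex of respondent",  # assumed variable label
        "value_labels": {1: "Male", 2: "Female", 9: "Unreported"},
    }
}


def decode(variable, code, dictionary=data_dictionary):
    """Return the value label for a code, or the code as text if unlabeled."""
    labels = dictionary[variable]["value_labels"]
    return labels.get(code, str(code))


observations = [{"Q_01": 1}, {"Q_01": 2}, {"Q_01": 9}]
decoded = [decode("Q_01", row["Q_01"]) for row in observations]
print(decoded)
```

Without the dictionary, the values 1, 2, and 9 carry no meaning for a data user.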

Many other features (descriptive and reference metadata) of a micro-dataset should also be described such as the objectives and the methodology of data collection, a description of the sampling design for sample surveys, the period of data collection, the identification of the primary investigator and other contributors, the scope and geographic coverage of the data, and much more. This information is essential to make the microdata usable and discoverable.

Metadata standard: the DDI Codebook

The Data Documentation Initiative (DDI) metadata standard provides a structured and comprehensive list of metadata elements and attributes for the documentation of microdata. The DDI originated in the Inter-university Consortium for Political and Social Research (ICPSR), a membership-based organization with more than 500 member colleges and universities worldwide. The DDI is now the project of an alliance of North American and European institutions. Member institutions comprise many of the largest data producers and data archives in the world. The DDI standard is published under the terms of the [GNU General Public License](http://www.gnu.org/licenses) (version 3 or later).

The DDI standard is used by a large community of data archivists, including data libraries from academia and research centers, national statistical agencies and other official data producing agencies, and international organizations. The DDI standard has two branches: the DDI-Codebook (version 2.x) and the DDI LifeCycle (version 3.x). These two branches serve different purposes and audiences.

The Metadata Editor implements the DDI-Codebook. Internally, it uses a slightly simplified version of the DDI Codebook 2.5, to which a few elements are added. The DDI Alliance publishes the DDI-Codebook as an XML schema. The Metadata Editor uses a JSON implementation of the schema; the Metadata Editor however exports fully-compliant DDI Codebook 2.5 metadata in XML format (among other options).

The DDI Alliance developed the DDI-Codebook for organizing the content, presentation, transfer, and preservation of metadata in the social and behavioral sciences. It enables documenting microdata files in a simultaneously flexible and rigorous way. The DDI-Codebook aims to provide a straightforward means of recording and communicating all the salient characteristics of a micro-dataset. It is designed to encompass the kinds of data resulting from surveys, censuses, administrative records, experiments, direct observation and other systematic methodology for generating empirical measurements. The unit of observation can be individual persons, households, families, business establishments, transactions, countries or other subjects of scientific interest.

The technical description of the JSON schema used for the documentation of microdata is available at https://worldbank.github.io/metadata-schemas/#tag/Microdata.

Before you start

Before you start documenting a micro-dataset, it is highly recommended to carefully prepare the data and the related materials.

Prepare your data files

- Variable and value labels. Ensure that all variables and values are labeled in the data files (if the data are stored in Stata, SPSS, or another application that allows documentation of variables).

- Direct identifiers and confidential information. Drop the direct identifiers from the dataset (names, phone numbers of respondents, addresses, social security numbers, etc.) and other confidential information if you plan to share the data.

- Unique identifiers and relationships. Check that each observation in each data file has a unique identifier, in the form of a specific variable or a combination of variables. The unique identifiers can vary across data files. Ensure that there are no duplicated identifiers in any data file. If your dataset is composed of multiple related data files, check that the files can be merged without any issue. For example, if you have distinct data files at the household and individual levels (i.e., if you have a hierarchical data structure), use a statistical package to verify that all households have at least one corresponding individual, and that each individual belongs to one and only one household.

- Missing values. It is preferable (but not required) to use system missing values (instead of values like '999') for indicating missing values. If missing values are indicated by values other than system missing, make sure you are aware of these values (which will have to be marked as representing missing values when documenting the data in the Metadata Editor).

- Temporary variables. Drop all temporary variables (variables that were created for testing or other purpose, but that do not need to be kept in the dataset) and other unnecessary variables from the data files.

- Weighting. For sample survey datasets, it is recommended to include the relevant sampling weight variables in all data files where they apply (for the convenience of data users).

- File names. It is recommended to name your data files (and all other files you want to share) using a consistent naming convention, and in a way that will make it easier for users to understand the content of the file.

- Data file formats. The Metadata Editor provides an option to read data files to automatically extract the metadata available in them. If necessary, export your data files to a format supported by the Metadata Editor.
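The identifier checks recommended in the list above can be done in any statistical package. A minimal sketch in plain Python follows, assuming the data files have been loaded as lists of records; the variable names `hhid` and `pid` and the sample records are hypothetical.

```python
from collections import Counter


def duplicated_ids(rows, id_vars):
    """Return the identifier tuples that appear more than once in a file."""
    counts = Counter(tuple(row[v] for v in id_vars) for row in rows)
    return sorted(key for key, n in counts.items() if n > 1)


def check_hierarchy(households, individuals, hh_id):
    """Compare household identifiers across the two related files."""
    hh_ids = {row[hh_id] for row in households}
    ind_hh_ids = {row[hh_id] for row in individuals}
    return {
        # Households with no member in the individual-level file.
        "households_without_individuals": sorted(hh_ids - ind_hh_ids),
        # Household identifiers used by individuals but absent from the household file.
        "individuals_without_household": sorted(ind_hh_ids - hh_ids),
    }


# Hypothetical household-level and individual-level files.
households = [{"hhid": 1}, {"hhid": 2}, {"hhid": 3}]
individuals = [
    {"hhid": 1, "pid": 1},
    {"hhid": 1, "pid": 2},
    {"hhid": 2, "pid": 1},
    {"hhid": 4, "pid": 1},
]

print(duplicated_ids(households, ["hhid"]))
print(duplicated_ids(individuals, ["hhid", "pid"]))
print(check_hierarchy(households, individuals, "hhid"))
```

In this made-up example, household 3 has no individual and the individual in household 4 has no matching household record; both issues should be resolved before documenting the data.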

Prepare the related materials to be included as external resources

External resources are all the electronic files (documents, data files, scripts, or other) that you want to preserve or disseminate with the data. All these digital resources should be gathered, and saved preferably in open or standard format under user-friendly names. When documenting a survey or census dataset for example, ensure that you:

- Have a copy of the questionnaire(s) in electronic format. Include the file in both the original format and in a PDF version. If the survey was conducted using computer-assisted interviews with software like Survey Solutions or CSPro, generate a PDF copy of the electronic form (Survey Solutions provides an option to generate such a file).

- Collect an electronic copy of all other relevant documents, such as interviewer manuals, technical documentation on sampling, survey technical and analytical reports, presentations of results, press releases, etc. For documents available in non-standard format, generate a PDF copy.

- Collect all other digital materials related to the dataset, such as data scripts, photos, videos, and others.

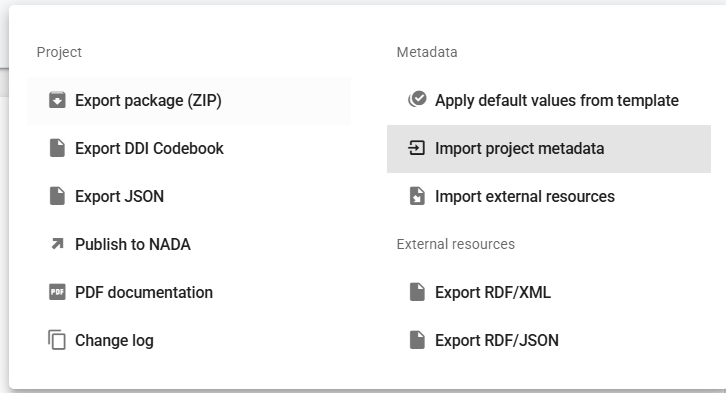

Create a new project

The first step in documenting a dataset is to create a new project. You do that by clicking on CREATE NEW PROJECT in the My projects page. Select Microdata as data type. This will open a new, untitled project Home page.

In the Templates frame, select the template you want to use to document the dataset. A default template is proposed; no action is needed if you want to use that template. Otherwise, switch to another template by clicking on the template name. Note that you can at any time change the template used for the documentation of a project. The selected template will determine what you see in the navigation tree and in the metadata entry pages.

Switching from one template to another will not impact the metadata that has already been entered; no information will be deleted from the metadata.

Once a project has been created, you can import the data files (if available) and start documenting the dataset.

Import and document the dataset

The DDI Codebook metadata standard used to document microdata has the following sections (containers), which will be found in any template designed based on that standard:

Document description. This section contains metadata on the metadata (to capture information on who documented the dataset, when, etc.)

Study description. This section contains cataloguing and referential metadata that describe the study (survey, census, administrative recording system), with information like the title, geographic and temporal coverage, producers and sponsors, and many more.

File description. This section provides a brief description of each data file.

Variable description. This section contains the documentation of each variable, with elements like variable name and label, value labels, literal questions and interviewer instructions, universe, summary statistics, and more.

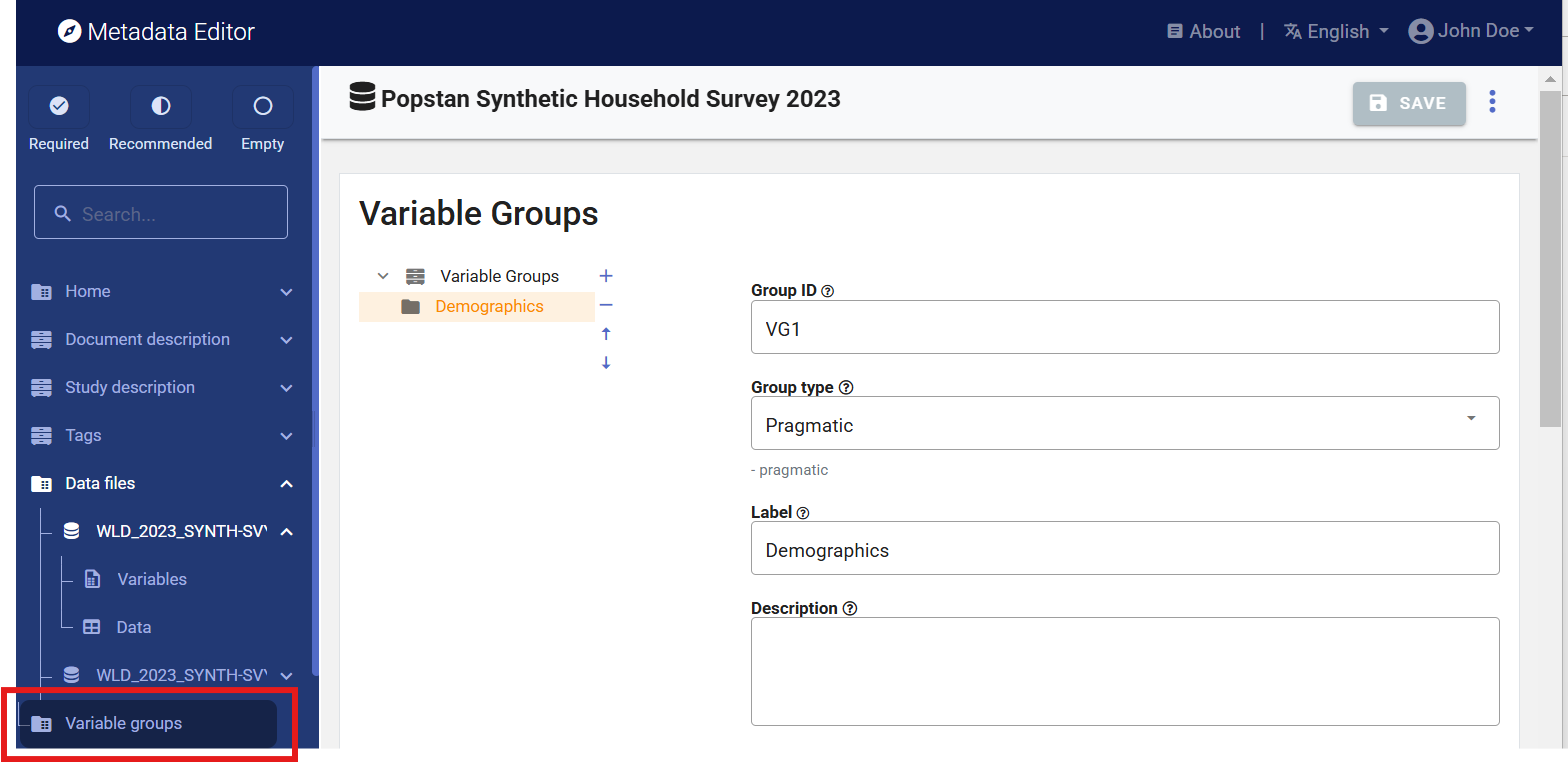

Variable groups. This optional section provides a way to organize variables into groups other than the data files, e.g., grouping of variables by theme. Variable grouping is intended to increase the convenience to users, and possibly the online discoverability of data (if useful group descriptions are provided).

In addition to these sections specific to the DDI Codebook metadata standard, the Metadata Editor includes sections common to all data types: External resources, Tags, DataCite, Provenance, and (optional) Administrative metadata.

The DDI-specific sections are described below. For instructions on sections common to all data types, refer to the chapter General instructions.

The description of metadata elements provided below corresponds to the metadata elements included in the default template XXXX provided with the Metadata Editor. The list, the groupings, and the label of the elements may differ if you use a different template.

Document description

The Document description section of the navigation tree contains elements intended to document the metadata being generated ("metadata about the metadata"). In the DDI jargon, the document is the DDI-compliant metadata file (XML or JSON) that contains the structured metadata related to the dataset. This section corresponds to the section Information on metadata in other standards.

All content in this section is optional; it is however good and recommended practice to document the metadata as precisely as possible. This information will not be useful to data users, but it will be to catalog administrators. When metadata is shared across catalogs (or automatically harvested from DDI-compliant data catalogs), the information entered in the Document description provides transparency and clarity on the origin of the metadata.

Study description

The Study description section of the DDI Codebook metadata standard contains the cataloguing and referential metadata. Two metadata elements are required in this container: the primary unique identifier of the dataset, and its title. Other elements are optional, unless they have been set as required in a custom-defined template.
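A completeness check on the two required elements could be sketched as follows. This is an illustration only: the flat dictionary is a simplification (the actual JSON schema nests these elements), and the helper name and sample values are hypothetical.

```python
# Keys of the two elements required in the Study description container.
REQUIRED_ELEMENTS = ("idno", "title")


def missing_required(study_description, required=REQUIRED_ELEMENTS):
    """Return the required keys that are absent or empty."""
    return [
        key
        for key in required
        if not str(study_description.get(key) or "").strip()
    ]


# Hypothetical, simplified study description (flat, unlike the real schema).
study = {
    "idno": "MDA_UGA_DHS_2005_v01",
    "title": "Demographic and Health Survey 2005",
    "abstract": "",  # optional element, may be left empty
}

print(missing_required(study))
print(missing_required({"title": "Labor Force Survey, December 2020"}))
```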

The metadata entered for this section should be as comprehensive as possible. You enter metadata by selecting a section in the navigation tree, and entering the information in the metadata entry page.

Note that all dates should preferably be entered in ISO format (YYYY-MM-DD or YYYY-MM or YYYY).
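The three accepted forms can be checked before entering a date. The helper below is a minimal sketch using only the Python standard library; the function name is hypothetical and the check is not part of the Metadata Editor.

```python
import re
from datetime import date

# Accepts YYYY, YYYY-MM, or YYYY-MM-DD.
ISO_PATTERN = re.compile(r"(\d{4})(?:-(\d{2})(?:-(\d{2}))?)?")


def is_iso_date(text):
    """Return True if text is a valid date in one of the three ISO forms."""
    match = ISO_PATTERN.fullmatch(text)
    if not match:
        return False
    year, month, day = match.groups()
    try:
        # Missing month or day defaults to 1 so the calendar check still applies.
        date(int(year), int(month or 1), int(day or 1))
    except ValueError:
        return False
    return True


for candidate in ["2020-12-31", "2020-12", "2020", "31/12/2020", "2021-02-30"]:
    print(candidate, is_iso_date(candidate))
```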

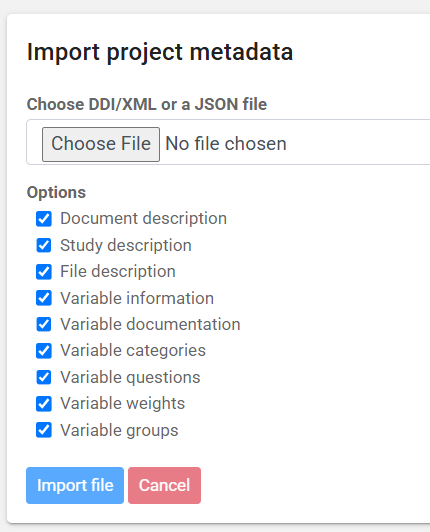

Refer to chapter General instructions for information on importing metadata from an existing project, and on copy/pasting content in fields that allow it.

By default, all content entered in the metadata entry page will consist of plain text (which will be saved in XML or JSON format). A few fields may allow entering formatted content (markdown, HTML, or LaTeX formulas); which fields allow for such formatting is determined in the template design.

We provide below a description of the metadata elements contained in the Study description section of the default metadata template provided in the Metadata Editor. Other templates may show a different selection, different labels, or present the elements in a different sequence. When you document a dataset, it is not expected that all these elements will be filled. Fill all required elements, all recommended elements when content can be made available, and as many of the other elements as possible (the required and recommended elements will be those marked as required or recommended in the metadata standard or template).

In the list of metadata elements below, the key of each element in the metadata standard is provided between brackets next to the corresponding element's label in the template.

IDENTIFICATION

Title (title) This element is "Required". Provide here the full authoritative title for the study. Make sure to use a unique name for each distinct study. The title should indicate the time period covered. For example, in a country conducting monthly labor force surveys, the title of a study would be like “Labor Force Survey, December 2020”. When a survey spans two years (for example, a household income and expenditure survey conducted over a period of 12 months from June 2020 to June 2021), the range of years can be provided in the title, for example “Household Income and Expenditure Survey 2020-2021”. The title of a survey should be its official name as stated on the survey questionnaire or in other study documents (report, etc.). Including the country name in the title is optional (another metadata element is used to identify the reference countries). Pay attention to the consistent use of capitalization in the title.

Subtitle (sub_title) The sub-title is a secondary title used to amplify or state certain limitations on the main title, for example to add information usually associated with a sequential qualifier for a survey. For example, we may have “[country] Universal Primary Education Project, Impact Evaluation Survey 2007” as title, and “Baseline dataset” as sub-title. Note that this information could also be entered as a Title with no Subtitle: “[country] Universal Primary Education Project, Impact Evaluation Survey 2007 - Baseline dataset”.

Alternate title (alternate_title) The alternate_title will typically be used to capture the abbreviation of the survey title. Many surveys are known and referred to by their acronym. The survey reference year(s) may be included. For example, the "Demographic and Health Survey 2012" would be abbreviated as "DHS 2012", or the "Living Standards Measurement Study 2020-2021" as "LSMS 2020-2021".

Translated title (translated_title) In countries with more than one official language, a translation of the title may be provided here. Likewise, the translated title may simply be a translation into English from a country’s own language. Special characters should be properly displayed, such as accents and other stress marks or different alphabets.

Primary ID (idno) idno is the primary identifier of the dataset. It is a unique identification number used to identify the study (survey, census or other). A unique identifier is required for cataloguing purposes, so this element is declared as "Required". The identifier will allow users to cite the dataset properly. The identifier must be unique within the catalog. Ideally, it should also be globally unique; the recommended option is to obtain a Digital Object Identifier (DOI) for the study. Alternatively, the idno can be constructed by an organization using a consistent scheme. The scheme could for example be “catalog-country-study-year-version”, where catalog is the abbreviation of the catalog or producing agency, country is the 3-letter ISO country code, study is the study acronym, year is the reference year (or the year the study started), and version is a version number. Using that scheme, the Uganda 2005 Demographic and Health Survey for example would have the following idno (where “MDA” stands for “My Data Archive”): MDA_UGA_DHS_2005_v01. Note that the schema allows you to provide more than one identifier for the same study (in element identifiers); a catalog-specific identifier is thus not incompatible with a globally unique identifier like a DOI. The identifier should not contain blank spaces.

Other identifiers (identifiers) This repeatable element is used to enter identifiers (IDs) other than the idno entered in the Title statement. It can for example be a Digital Object Identifier (DOI). The idno can be repeated here (the idno element does not provide a type parameter; if a DOI or other standard reference ID is used as idno, it is recommended to repeat it here with the identification of its type).

- Type (type) The type of unique ID, e.g. "DOI".
- Identifier (identifier) The identifier itself.

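An organization that constructs its own idno values may find it convenient to generate them programmatically. The sketch below follows the example identifier given for the Primary ID element (components joined with underscores, two-digit version number); the function name and the padding rule are assumptions, not a convention imposed by the Metadata Editor.

```python
import re


def build_idno(catalog, country, study, year, version):
    """Assemble an identifier such as MDA_UGA_DHS_2005_v01."""
    parts = [catalog, country, study, str(year), f"v{version:02d}"]
    idno = "_".join(parts)
    # The identifier should not contain blank spaces.
    if re.search(r"\s", idno):
        raise ValueError("the identifier should not contain blank spaces")
    return idno


print(build_idno("MDA", "UGA", "DHS", 2005, 1))
```
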
Study type (series_name) The name of the series to which the study belongs. For example, "Living Standards Measurement Study (LSMS)" or "Demographic and Health Survey (DHS)" or "Multiple Indicator Cluster Survey VII (MICS7)". A description of the series can be provided in the element "series_info".

Series information (series_info) A study may be repeated at regular intervals (such as an annual labor force survey), or be part of an international survey program (such as the MICS, DHS, LSMS and others). The series statement provides information on the series. A brief description of the characteristics of the series, including when it started, how many rounds were already implemented, and who is in charge, should be provided here.

VERSION

Version name (version) The version number, also known as release or edition.

Version date (version_date) The ISO 8601 standard for dates (YYYY-MM-DD) is recommended for use with the "date" attribute.

Version responsibility (version_resp) The person(s) or organization(s) responsible for this version of the study.

Notes on version (version_notes) Version notes should provide a brief report on the changes made through the versioning process. The note should indicate how this version differs from other versions of the same dataset.

OVERVIEW

Abstract (abstract) An un-formatted summary describing the purpose, nature, and scope of the data collection, special characteristics of its contents, major subject areas covered, and what questions the primary investigator(s) attempted to answer when they conducted the study. The summary should ideally be between 50 and 5000 characters long. The abstract should provide a clear summary of the purposes, objectives and content of the survey. It should be written by a researcher or survey statistician aware of the study. Inclusion of this element is strongly recommended.

Kind of data (data_kind) This field describes the main type of microdata generated by the study: survey data, census/enumeration data, aggregate data, clinical data, event/transaction data, program source code, machine-readable text, administrative records data, experimental data, psychological test, textual data, coded textual, coded documents, time budget diaries, observation data/ratings, process-produced data, etc. A controlled vocabulary should be used as this information may be used to build facets (filters) in a catalog user interface.

Unit of analysis (analysis_unit) A study can have multiple units of analysis. This field will list the various units that can be analyzed. For example, a Living Standards Measurement Study (LSMS) may have collected data on households and their members (individuals), on dwelling characteristics, on prices in local markets, on household enterprises, on agricultural plots, and on characteristics of health and education facilities in the sample areas.

SCOPE

Keywords (keywords) Keywords are words or phrases that describe salient aspects of a data collection's content. The addition of keywords can significantly improve the discoverability of data. Keywords can summarize and improve the description of the content or subject matter of a study. For example, keywords "poverty", "inequality", "welfare", and "prosperity" could be attached to a household income survey used to generate poverty and inequality indicators (for which these keywords may not appear anywhere else in the metadata). A controlled vocabulary can be employed. Keywords can be selected from a standard thesaurus, preferably an international, multilingual thesaurus.

- Keyword (keyword) A keyword (or phrase).
- Vocabulary (vocab) The controlled vocabulary from which the keyword is extracted, if any.
- URL (uri) The URI of the controlled vocabulary used, if any.

Topics (topics) The topics field indicates the broad substantive topic(s) that the study covers. A topic classification facilitates referencing and searches in on-line data catalogs.

- Topic (**) The label of the topic. Topics should be selected from a standard controlled vocabulary such as the Council of European Social Science Data Archives (CESSDA) Topic Classification.
- Vocabulary (vocab) The specification (name including the version) of the controlled vocabulary in use.
- URL (uri) A link (URL) to the controlled vocabulary website.

UNIVERSE AND GEOGRAPHIC COVERAGE

Universe (universe) The universe is the group of persons (or other units of observations, like dwellings, facilities, or other) that are the object of the study and to which any analytic results refer. The universe will rarely cover the entire population of the country. Sample household surveys, for example, may not cover homeless, nomads, diplomats, community households. Population censuses do not cover diplomats. Facility surveys may be limited to facilities of a certain type (e.g., public schools). Try to provide the most detailed information possible on the population covered by the survey/census, focusing on excluded categories of the population. For household surveys, age, nationality, and residence commonly help to delineate a given universe, but any of a number of factors may be involved, such as sex, race, income, veteran status, criminal convictions, etc. In general, it should be possible to tell from the description of the universe whether a given individual or element (hypothetical or real) is a member of the population under study.

Country (nation) Indicates the country or countries (or "economies", or "territories") covered in the study (but not the sub-national geographic areas). If the study covers more than one country, they will be entered separately.

- Name (name) The country name, even in cases where the study does not cover the entire country.
- Code (abbreviation) The abbreviation will contain a country code, preferably the 3-letter ISO 3166-1 country code.

Geographic coverage (geog_coverage) Information on the geographic coverage of the study. This includes the total geographic scope of the data, and any additional levels of geographic coding provided in the variables. Typical entries will be "National coverage", "Urban areas", "Rural areas", "State of ...", "Capital city", etc. This does not describe where the data were collected; it describes which area the data are representative of. This means for example that a sample survey could be declared as having a national coverage even if some districts of the country were not included in the sample, as long as the sample is nationally representative.

Geographic coverage notes (geog_coverage_notes) Additional information on the geographic coverage of the study entered as a free text field.

Geographic unit (geog_unit) Describes the levels of geographic aggregation covered by the data. Particular attention must be paid to include information on the lowest geographic area for which data are representative.

Bounding box (bbox) This element is used to define one or multiple bounding box(es), which are the rectangular fundamental geometric description of the geographic coverage of the data. A bounding box is defined by west and east longitudes and north and south latitudes, and includes the largest geographic extent of the dataset's geographic coverage. The bounding box provides the geographic coordinates of the top-left (north/west) and bottom-right (south/east) corners of a rectangular area. This element can be used in catalogs as the first pass of a coordinate-based search. This element is optional, but if the bound_poly element (see below) is used, then the bbox element must be included.

- West (west) West longitude of the bounding box.
- East (east) East longitude of the bounding box.
- South (south) South latitude of the bounding box.
- North (north) North latitude of the bounding box.

Bounding polygon (bound_poly) The bbox metadata element (see above) describes a rectangular area representing the entire geographic coverage of a dataset. The element bound_poly allows for a more detailed description of the geographic coverage, by allowing multiple and non-rectangular polygons (areas) to be described. This is done by providing list(s) of latitude and longitude coordinates that define the area(s). It should only be used to define the boundaries of the covered areas. This field is intended to enable a refined coordinate-based search, not to actually map an area. Note that if the bound_poly element is used, then the element bbox MUST be present as well, and all points enclosed by the bound_poly MUST be contained within the bounding box defined in bbox.

- Latitude (lat) The latitude of the coordinate.
- Longitude (lon) The longitude of the coordinate.
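The containment rule between the bounding polygon and the bounding box can be verified with a few lines of code. The sketch below uses the keys described above (west, east, south, north, lat, lon); the coordinates are hypothetical, and the code assumes the area does not cross the 180° meridian. Because a bounding box is convex, checking the polygon vertices is sufficient under a simple planar interpretation of the coordinates.

```python
def bounding_box(points):
    """Derive the smallest bounding box enclosing a list of lat/lon points."""
    lats = [p["lat"] for p in points]
    lons = [p["lon"] for p in points]
    return {
        "west": min(lons),
        "east": max(lons),
        "south": min(lats),
        "north": max(lats),
    }


def contains(bbox, point):
    """Return True if the point lies inside (or on the edge of) the box."""
    return (
        bbox["south"] <= point["lat"] <= bbox["north"]
        and bbox["west"] <= point["lon"] <= bbox["east"]
    )


# Hypothetical polygon vertices (not a real administrative boundary).
polygon = [
    {"lat": 0.0, "lon": 30.0},
    {"lat": 2.5, "lon": 33.5},
    {"lat": -1.0, "lon": 34.0},
]

bbox = bounding_box(polygon)
print(bbox)
print(all(contains(bbox, p) for p in polygon))
```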

PRODUCERS AND SPONSORS

Primary producer/investigator (authoring_entity) The name and affiliation of the person, corporate body, or agency responsible for the study’s substantive and intellectual content (the "authoring entity" or “primary investigator”). Generally, in a survey, the authoring entity will be the institution implementing the survey. Repeat the element for each authoring entity, and enter the affiliation when relevant. If various institutions have been equally involved as main investigators, they should all be listed. This only includes the agencies responsible for the implementation of the study, not sponsoring agencies or entities providing technical assistance (for which other metadata elements are available). The order in which authoring entities are listed is discretionary. It can be alphabetic or by significance of contribution. Individual persons can also be mentioned, if not prohibited by privacy protection rules.

- Name (name) The name of the person, corporate body, or agency responsible for the work's substantive and intellectual content. The primary investigator will in most cases be an institution, but could also be an individual in the case of small-scale academic surveys. If persons are mentioned, use the appropriate format of Surname, First name.
- Affiliation (affiliation) The affiliation of the person, corporate body, or agency mentioned in name.

Other producers (producers) This field is provided to list other interested parties and persons that have played a significant but not the leading technical role in implementing and producing the data (which will be listed in authoring_entity), and not the financial sponsors (which will be listed in funding_agencies).

- Name (name) The name of the person or organization.
- Abbreviation (abbr) The official abbreviation of the organization mentioned in name.
- Affiliation (affiliation) The affiliation of the person or organization mentioned in name.
- Role (role) A succinct description of the specific contribution by the person or organization in the production of the data.

Funding agencies (funding_agencies) The source(s) of funds for the production of the study. If different funding agencies sponsored different stages of the production process, use the role attribute to distinguish them.

- Name (name) The name of the funding agency.
- Abbreviation (abbr) The abbreviation (acronym) of the funding agency mentioned in name.
- Grant number (grant) The grant number. If an agency has provided more than one grant, list them all separated with a ";".
- Role (role) The specific contribution of the funding agency mentioned in name. This element is used when multiple funding agencies are listed to distinguish their specific contributions.

Budget (study_budget) This is a free-text field, not a structured element. The budget of a study will ideally be described by budget line. The currency used to describe the budget should be specified. This element can also be used to document issues related to the budget (e.g., documenting possible under-run and over-run).

Other contributors (oth_id) This element is used to acknowledge any other people and organizations that have in some form contributed to the study. This does not include other producers, which should be listed in producers, and financial sponsors, which should be listed in the element funding_agencies.

- Name (name) The name of the person or organization.
- Affiliation (affiliation) The affiliation of the person or organization mentioned in name.
- Role (role) A brief description of the specific role of the person or organization mentioned in name.

STUDY AUTHORIZATION

Provides structured information on the agency that authorized the study, the date of authorization, and an authorization statement. This element will be used when a special legislation is required to conduct the data collection (for example a Census Act) or when the approval of an Ethics Board or other body is required to collect the data.

Authorization date (date) The date, preferably entered in ISO 8601 format (YYYY-MM-DD), when the authorization to conduct the study was granted.

Authorizing agency (agency) Identification of the agency that authorized the study.

- Name (name) Name of the agent or agency that authorized the study.
- Affiliation (affiliation) The institutional affiliation of the authorizing agent or agency mentioned in name.
- Abbreviation (abbr) The abbreviation of the authorizing agent's or agency's name.

Authorization statement (authorization_statement) The text of the authorization (or a description and link to a document or other resource containing the authorization statement).

SAMPLING

Sample frame A description of the sample frame used for identifying the population from which the sample was taken. For example, a telephone book may be a sample frame for a phone survey. Or the listing of enumeration areas (EAs) of a population census can provide a sample frame for a household survey. In addition to the name, label and text describing the sample frame, this structure lists who maintains the sample frame, the period for which it is valid, a use statement, the universe covered, the type of unit contained in the frame as well as the number of units available, the reference period of the frame and procedures used to update the frame.

- Name (name) The name (title) of the sample frame.
- Valid periods (valid_period) Defines a time period for the validity of the sampling frame, using a list of events and dates.
  - Event (event) The event can for example be start or end.
  - Date (date) The date corresponding to the event, entered in ISO 8601 format: YYYY-MM-DD.
- Custodian (custodian) Custodian identifies the agency or individual responsible for creating and/or maintaining the sample frame.
- Universe (universe) A description of the universe of population covered by the sample frame. Age, nationality, and residence commonly help to delineate a given universe, but any of a number of factors may be involved, such as sex, race, income, etc. The universe may consist of elements other than persons, such as housing units, court cases, deaths, countries, etc. In general, it should be possible to tell from the description of the universe whether a given individual or element (hypothetical or real) is included in the sample frame.
- Unit type (unit_type) The type of the sampling frame unit (for example "household", or "dwelling").
- Units are primary (is_primary) This boolean attribute (true/false) indicates whether the unit is primary or not.
- Number of units (num_of_units) The number of units in the sample frame, possibly with information on its distribution (e.g. by urban/rural, province, or other).
- Update procedure (update_procedure) This element is used to describe how and with what frequency the sample frame is updated. For example: "The lists and boundaries of enumeration areas are updated every ten years at the occasion of the population census cartography work. Listing of households in enumeration areas are updated as and when needed, based on their selection in survey samples."
- Reference periods (reference_period) Indicates the period of time in which the sampling frame was actually used for the study in question. Use ISO 8601 date format to enter the relevant date(s).
  - Event (event) Indicates the type of event that the date corresponds to, e.g., "start", "end", "single".
  - Date (date) The relevant date in ISO 8601 date/time format.

Sampling procedure(sampling_procedure) This field only applies to sample surveys. It describes the type of sample and sample design used to select the survey respondents to represent the population. This section should include summary information that includes (but is not limited to): sample size (expected and actual) and how the sample size was decided; level of representation of the sample; sample frame used, and listing exercise conducted to update it; sample selection process (e.g., probability proportional to size or over sampling); stratification (implicit and explicit); design omissions in the sample; strategy for absent respondents/not found/refusals (replacement or not). Detailed information on the sample design is critical to allow users to adequately calculate sampling errors and confidence intervals for their estimates. To do that, they will need to be able to clearly identify the variables in the dataset that represent the different levels of stratification and the primary sampling unit (PSU).

In publications and reports, the description of the sampling design often contains complex formulas and symbols. As the XML and JSON formats used to store the metadata are plain text files, they cannot contain these complex representations. You may however provide references (title/author/date) to documents where such detailed descriptions are provided, and make sure that the documents (or links to the documents) are provided in the catalog where the survey metadata are published.

Deviations from sample design(sampling_deviation) Sometimes the reality of the field requires a deviation from the sampling design (for example, when some areas are difficult to access because of weather problems, political instability, etc.). If, for any reason, the implemented sample deviated from the design, this can be reported here. This element provides information on the correspondence, and on possible discrepancies, between the sampled units (obtained) and available statistics for the population as a whole (age, sex ratio, marital status, etc.).

Response rates(response_rate) The response rate is the percentage of sample units that participated in the survey based on the original sample size. Omissions may occur due to refusal to participate, impossibility to locate the respondent, or other reasons. This element is used to provide a narrative description of the response rate, possibly by stratum or other criteria, and if possible with an identification of possible causes. If information is available on the causes of non-response (refusal/not found/other), it can be reported here. This field can also be used to describe non-responses in population censuses.

Weighting(weight) This field only applies to sample surveys. The use of sampling procedures may make it necessary to apply weights to produce accurate statistical results. Describe here the criteria for using weights in analysis of a collection, and provide a list of the variables used as weighting coefficients. If more than one variable is a weighting variable, describe how these variables differ from each other and what the purpose of each one of them is.

SURVEY INSTRUMENT

Questionnaires(research_instrument) The research instrument refers to the questionnaire or form used for collecting data. The following should be mentioned:

- List of questionnaires and short description of each (all questionnaires must be provided as External Resources)

- In what language(s) was/were the questionnaire(s) available?

- Information on the questionnaire design process (based on a previous questionnaire, based on a standard model questionnaire, review by stakeholders). If a document was compiled that contains the comments provided by the stakeholders on the draft questionnaire, or a report prepared on the questionnaire testing, a reference to these documents can be provided here.

Instrument development(instru_development) Describe any development work on the data collection instrument. This may include a description of the review process, standards followed, and a list of agencies/people consulted.

Notes on methodology(method_notes) This element is provided to capture any additional relevant information on the data collection methodology, which could not fit in the previous metadata elements.

DATA COLLECTION

Dates of data collection(coll_dates) Contains the date(s) when the data were collected, which may be different from the date the data refer to (see time_periods below). For example, data may be collected over a period of 2 weeks (coll_dates) about household expenditures during a reference week (time_periods) preceding the beginning of data collection. Use the event attribute to specify the "start" and "end" for each period entered.
- Start(start) Date the data collection started (for the specified cycle, if any). Enter the date in ISO 8601 format (YYYY-MM-DD or YYYY-MM or YYYY).
- End(end) Date the data collection ended (for the specified cycle, if any). Enter the date in ISO 8601 format (YYYY-MM-DD or YYYY-MM or YYYY).
- Cycle(cycle) Identification of the cycle of data collection. The cycle attribute permits specification of the relevant cycle, wave, or round of data. For example, a household consumption survey could visit households in four phases (one per quarter). Each quarter would be a cycle, and the specific dates of data collection for each quarter would be entered.
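As an illustration, the dates of data collection for a survey fielded in two quarterly cycles could be entered as shown below. The values are invented, and `check_periods` is a hypothetical helper (not part of the Metadata Editor) that only verifies that each date follows one of the three accepted patterns: YYYY-MM-DD, YYYY-MM, or YYYY.

```python
import re

# Accepts YYYY, YYYY-MM, or YYYY-MM-DD (pattern check only; it does not
# verify that a day exists in a given month).
ISO_PARTIAL = re.compile(r"\d{4}(-(0[1-9]|1[0-2])(-(0[1-9]|[12]\d|3[01]))?)?")

# Invented example: one entry per cycle of data collection.
coll_dates = [
    {"start": "2021-01-11", "end": "2021-01-24", "cycle": "Quarter 1"},
    {"start": "2021-04-12", "end": "2021-04-25", "cycle": "Quarter 2"},
]


def check_periods(periods):
    """Return a list of 'key: value' strings for dates with an invalid pattern."""
    problems = []
    for period in periods:
        for key in ("start", "end"):
            if not ISO_PARTIAL.fullmatch(period[key]):
                problems.append(f"{key}: {period[key]}")
    return problems


print(check_periods(coll_dates))
```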

Time method(time_method) The time method or time dimension of the data collection. A controlled vocabulary can be used. The entries for this element may include "panel survey", "cross-section", "trend study", or "time-series".

Frequency(frequency) For data collected at more than one point in time, the frequency with which the data were collected.

Time periods(time_periods) This refers to the time period (also known as span) covered by the data, not the dates of data collection.
- Start(start) The start date for the cycle being described. Enter the date in ISO 8601 format (YYYY-MM-DD or YYYY-MM or YYYY).
- End(end) The end date for the cycle being described. Enter the date in ISO 8601 format (YYYY-MM-DD or YYYY-MM or YYYY). Indicate open-ended dates with two periods (..).
- Cycle(cycle) The cycle attribute permits specification of the relevant cycle, wave, or round of data.

Data collection sources(sources) A description of sources used for developing the methodology of the data collection.
- Name(name) The name and other information on the source. For example, "United States Internal Revenue Service Quarterly Payroll File".
- Origin(origin) For historical materials, information about the origin(s) of the sources and the rules followed in establishing the sources should be specified. This may not be relevant to survey data.
- Characteristics(characteristics) Assessment of characteristics and quality of source material. This may not be relevant to survey data.

Mode of data collection(coll_mode) The mode of data collection is the manner in which the interview was conducted or information was gathered. Ideally, a controlled vocabulary will be used to constrain the entries in this field, which could include items like "telephone interview", "face-to-face paper and pen interview", "face-to-face computer-assisted interviews (CAPI)", "mail questionnaire", "computer-aided telephone interviews (CATI)", "self-administered web forms", "measurement by sensor", and others.

This is a repeatable field, as some data collection activities implement multi-mode data collection (for example, a population census can offer respondents the option to submit information via web forms, telephone interviews, mailed forms, or face-to-face interviews). Note that in the API description (see screenshot above), the element is described as having type "null", not {}. This is due to the fact that the element can be entered either as a list (repeatable element) or as a string.

Data collectors(data_collectors) The entity (individual, agency, or institution) responsible for administering the questionnaire or interview or compiling the data.
- Name(name) In most cases, we will record here the name of the agency, not the name of interviewers. Only in the case of very small-scale surveys, with a very limited number of interviewers, will the names of persons be included as well.
- Affiliation(affiliation) The affiliation of the data collector mentioned in name.
- Abbreviation(abbr) The abbreviation given to the agency mentioned in name.
- Role(role) The specific role of the person or agency mentioned in name.

Collector training(collector_training) Describes the training provided to data collectors including interviewer training, process testing, compliance with standards etc. This set of elements is repeatable, to capture different aspects of the training process.
- Type(type) The type of training being described. For example, "Training of interviewers", "Training of controllers", "Training of cartographers", "Training on the use of tablets for data collection", etc.
- Training(training) A brief description of the training. This may include information on the dates and duration, audience, location, content, trainers, issues, etc.
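Because the mode of data collection may be entered either as a single string or as a list, scripts that read the metadata may find it convenient to normalize both forms to a list first. The function below is a minimal sketch of that idea; `as_list` is a hypothetical helper name, not part of the schema or of the Metadata Editor.

```python
def as_list(value):
    """Return the coll_mode value as a list, whatever form it was entered in."""
    if value is None:
        return []
    if isinstance(value, str):
        return [value]
    return list(value)


# A single-mode survey entered as a string.
print(as_list("face-to-face computer-assisted interviews (CAPI)"))

# A multi-mode census entered as a list.
print(as_list(["self-administered web forms", "telephone interview"]))
```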

Control operations(control_operations) This element will provide information on the oversight of the data collection, i.e. on methods implemented to facilitate data control performed by the primary investigator or by the data archive.

Supervision(act_min) A summary of actions taken to minimize data loss. This includes information on actions such as follow-up visits, supervisory checks, historical matching, estimation, etc. Note that this element does not have to include detailed information on response rates, as a specific metadata element is provided for that purpose in section analysis_info / response_rate.

Notes on data collection(coll_situation) A description of noteworthy aspects of the data collection situation. This element is provided to document any specific situations, observations, or events that occurred during data collection. Consider addressing questions such as:

- Was a training of enumerators held? (elaborate)

- Was a pilot survey conducted?

- Did any events have a bearing on the data quality? (elaborate)

- How long did an interview take on average?

- In what language(s) were the interviews conducted?

- Were there any corrective actions taken by management when problems occurred in the field?

DATA PROCESSING

Data processing(data_processing) This element is used to describe how data were electronically captured (e.g., entered in the field, in a centralized manner by data entry clerks, captured electronically using tablets and a CAPI application, via web forms, etc.). Information on devices and software used for data capture can also be provided here. Other data processing procedures not captured elsewhere in the documentation can be described here (tabulation, etc.).
- Type(type) The type attribute supports better classification of this activity, including the optional use of a controlled vocabulary. The vocabulary could include options like "data capture", "data validation", "variable derivation", "tabulation", "data visualization", "anonymization", "documentation", etc.
- Description(description) A description of a data processing task.

Cleaning operations(cleaning_operations) A description of the methods used to clean or edit the data, e.g., consistency checking, wild code checking, etc. The data editing should contain information on how the data was treated or controlled for in terms of consistency and coherence. This item does not concern the data entry phase but only the editing of data whether manual or automatic. It should provide answers to questions like: Was a hot deck or a cold deck technique used to edit the data? Were corrections made automatically (by program), or by visual control of the questionnaire? What software was used? If materials are available (specifications for data editing, report on data editing, programs used for data editing), they should be listed here and provided as external resources in data catalogs (the best documentation of data editing consists of well-documented reproducible scripts).

STUDY ACTIVITIES

This section is used to describe the process that led to the production of the final output of the study, from its inception/design to the dissemination of the final output.

The Generic Statistical Business Process Model (GSBPM) provides a useful decomposition of such a process, which can be used to list the activities to be described. This is a repeatable set of metadata elements; each activity should be documented separately.

Type(activity_type) The type of activity. A controlled vocabulary can be used, possibly comprising the main components of the GSBPM: {Needs specification, Design, Build, Collect, Process, Analyze, Disseminate, Evaluate}.

Description(activity_description) A brief description of the activity.

Participants(participants) A list of participants (persons or organizations) in the activity. This is a repeatable set of elements; each participant can be documented separately.
- Name(name) Name of the participating person or organization.
- Affiliation(affiliation) Affiliation of the person or organization mentioned in name.
- Role(role) Specific role (participation) of the person or organization mentioned in name.

Resources(resources) A description of the data sources and other resources used to implement the activity.
- Name(name) The name of the resource.
- Origin(origin) The origin of the resource mentioned in name.
- Characteristics(characteristics) The characteristics of the resource mentioned in name.

Activity outcome(outcome) Description of the main outcome of the activity.
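To make the structure of this repeatable block more concrete, the sketch below documents two activities and checks their type against the GSBPM phases listed above. The keys are the element names of this section; the values are invented, and `unknown_types` is a hypothetical helper, not a feature of the Metadata Editor.

```python
# GSBPM phases, as listed in the description of activity_type.
GSBPM_PHASES = [
    "Needs specification", "Design", "Build", "Collect",
    "Process", "Analyze", "Disseminate", "Evaluate",
]

# Invented example: one dictionary per activity.
study_activities = [
    {
        "activity_type": "Design",
        "activity_description": "Design of the questionnaire and of the sample.",
        "participants": [
            {"name": "National Statistics Office", "affiliation": "", "role": "Lead"},
        ],
        "resources": [
            {"name": "Census enumeration area listing", "origin": "Population census",
             "characteristics": "Used as sample frame"},
        ],
        "outcome": "Approved questionnaire and sample design report.",
    },
    {
        "activity_type": "Collect",
        "activity_description": "Fieldwork conducted in four quarterly rounds.",
        "participants": [],
        "resources": [],
        "outcome": "Completed interviews for all rounds.",
    },
]


def unknown_types(activities):
    """Return the activity types that are not GSBPM phases."""
    return [a["activity_type"] for a in activities
            if a["activity_type"] not in GSBPM_PHASES]


print(unknown_types(study_activities))
```

A check of this kind is only useful when the GSBPM phases are adopted as the controlled vocabulary; other vocabularies are equally valid.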

QUALITY STANDARDS

This section lists the specific standards complied with during the execution of this study, and provides the option to formulate a general statement on the quality of the data. Any known quality issue should be reported here. Such issues are better reported by the data producer or curator, not left to the secondary analysts to discover. Transparency in reporting quality issues will increase credibility and reputation of the data provider.

Standard compliance(compliance_description) A statement on compliance with standard quality assessment procedures. The list of these standards can be documented in the next element, standards.

Quality standards(standards) An itemized list of quality standards complied with during the execution of the study.
- Standard(name) The name of the quality standard, if such a standard was used. Include the date when the standard was published, and the version of the standard with which the study is compliant, and the "URI" attribute includes .
- Producer(producer) The producer of the quality standard mentioned in name.

Other quality statement(other_quality_statement) Any additional statement on the quality of the data, entered as free text. This can be independent of any particular quality standard.

DATA APPRAISAL

Sampling errors(sampling_error_estimates) Sampling errors are intended to measure how precisely one can estimate a population value from a given sample. For sample surveys, it is good practice to calculate and publish sampling errors. This field is used to provide information on these calculations (not to provide the sampling errors themselves, which should be made available in publications or reports). Information can be provided on which ratios/indicators have been subjected to the calculation of sampling errors, and on the software used for computing the sampling errors. Reference to a report or other document where the results can be found can also be provided.

Ex-post evaluation

Ex-post evaluations are frequently done within large statistical or research organizations, in particular when a study is intended to be repeated. Such evaluations are recommended by the Generic Statistical Business Process Model (GSBPM). This section of the schema is used to describe the evaluation procedures and their outcomes.

Evaluation type(type) The type attribute identifies the type of evaluation with or without the use of a controlled vocabulary.

Evaluation process(evaluation_process) A description of the evaluation process. This may include information on the dates the evaluation was conducted, cost/budget, relevance, institutional or legal arrangements, etc.

Evaluators(evaluator) The evaluator element identifies the person(s) and/or organization(s) involved in the evaluation.
- Name(name) The name of the person or organization involved in the evaluation.
- Abbreviation(abbr) An abbreviation for the organization mentioned in name.
- Affiliation(affiliation) The affiliation of the individual or organization mentioned in name.
- Role(role) The specific role played by the individual or organization mentioned in name in the evaluation process.

Completion date(completion_date) The date the ex-post evaluation was completed.

Evaluation outcomes(outcomes) A description of the outcomes of the evaluation. It may include a reference to an evaluation report.

Other data appraisal(data_appraisal) This section is used to report any other action taken to assess the reliability of the data, or any observations regarding data quality. Describe here issues such as response variance, interviewer and response bias, question bias, etc. For a population census, this can include information on the main results of a post enumeration survey (a report should be provided in external resources and mentioned here); it can also include relevant comparisons with data from other sources that can be used as benchmarks.

DATA AVAILABILITY

This section describes the access conditions and terms of use for the dataset. This set of elements should be used when the access conditions are well-defined and are unlikely to change. An alternative option is to document the terms of use in the catalog where the data will be published, instead of "freezing" them in a metadata file.

Location of dataset(access_place) Name of the location where the data collection is currently stored.

URL for location of dataset(access_place_url) The URL of the website of the location where the data collection is currently stored.

Archive where study is originally stored(original_archive) Archive from which the data collection was obtained, if any (the originating archive). Note that the schema we propose provides an element provenance, which is not part of the DDI, that can be used to document the origin of a dataset.

Extent of collection(coll_size) Extent of the collection. This is a summary of the number of physical files that exist in a collection. We will record here the number of files that contain data and note whether the collection contains other machine-readable documentation and/or other supplementary files and information such as data dictionaries, data definition statements, or data collection instruments. This element will rarely be used.

Completeness(complete) This item indicates the relationship of the data collected to the amount of data coded and stored in the data collection. Information as to why certain items of collected information were not included in the data file stored by the archive should be provided here. Example: "Because of embargo provisions, data values for some variables have been masked. Users should consult the data definition statements to see which variables are under embargo." This element will rarely be used.

Number of files(file_quantity) The total number of physical files associated with a collection. This element will rarely be used.

Notes on data availability(notes) Additional information on the dataset availability, not included in one of the elements above.

DEPOSITOR INFORMATION

Depositor(depositor) The name of the person (or institution) who provided this study to the archive storing it.
- Name(name) The name of the depositor. It can be an individual or an organization.
- Abbreviation(abbr) The official abbreviation of the organization mentioned in name.
- Affiliation(affiliation) The affiliation of the person or organization mentioned in name.
- URL(uri) A URL to the depositor.

Date of deposit(deposit_date) The date that the study was deposited with the archive that originally received it. The date should be entered in the ISO 8601 format (YYYY-MM-DD or YYYY-MM or YYYY). The exact date should be provided when possible.

DISTRIBUTOR INFORMATION

Distributors(distributors) The organization(s) designated by the author or producer to generate copies of the study output including any necessary editions or revisions.
- Name(name) The name of the distributor. It can be an individual or an organization.
- Abbreviation(abbr) The official abbreviation of the organization mentioned in name.
- Affiliation(affiliation) The affiliation of the person or organization mentioned in name.
- URL(uri) A URL to the ordering service or download facility on a Web site.

Date of distribution(distribution_date) The date that the study was made available for distribution/presentation. The date should be entered in the ISO 8601 format (YYYY-MM-DD or YYYY-MM or YYYY). The exact date should be provided when possible.

DATA ACCESS

Access authority(contact) Names and addresses of individuals responsible for the study. Individuals listed as contact persons will be used as resource persons regarding problems or questions raised by users.
- Name(name) The name of the person or organization that can be contacted.
- Affiliation(affiliation) The affiliation of the person or organization mentioned in name.
- URL(uri) A URL to the contact mentioned in name.
- Email(email) An email address for the contact mentioned in name.

Confidentiality declaration(conf_dec) This element is used to determine if signing of a confidentiality declaration is needed to access a resource. We may indicate here which Affidavit of Confidentiality must be signed before the data can be accessed. Another option is to include this information in the element Access conditions. If there is no confidentiality issue, this field can be left blank.

Required(required) The "required" attribute is used to aid machine processing of this element. The default specification is "yes".

Text(txt) A statement on confidentiality and limitations to data use. This statement does not replace a more comprehensive data agreement (see Access conditions). An example of such a statement could be the following: "Confidentiality of respondents is guaranteed by Articles N to NN of the National Statistics Act of [date]. Before being granted access to the dataset, all users have to formally agree:

- To make no copies of any files or portions of files to which s/he is granted access except those authorized by the data depositor.

- Not to use any technique in an attempt to learn the identity of any person, establishment, or sampling unit not identified on public use data files.

- To hold in strictest confidence the identification of any establishment or individual that may be inadvertently revealed in any documents or discussion, or analysis.

- That such inadvertent identification revealed in her/his analysis will be immediately and in confidentiality brought to the attention of the data depositor."

Form ID(form_id) Indicates the number or ID of the confidentiality declaration form that the user must fill out.

Form URL(form_url) The form_url element is used to provide a link to an online confidentiality declaration form.

Access conditions(conditions) Indicates any additional information that will assist the user in understanding the access and use conditions of the data collection.

Citation requirement(cit_req) A citation requirement that indicates the way that the dataset should be referenced when cited in any publication. Providing a citation requirement helps ensure that the data producer gets proper credit, and that results of analysis can be linked to the proper version of the dataset. The data access policy should explicitly mention the obligation to comply with the citation requirement. The citation should include at least the primary investigator, the name and abbreviation of the dataset, the reference year, and the version number. Include also a website where the data or information on the data is made available by the official data depositor. Ideally, the citation requirement will include a DOI (see the DataCite website for recommendations).

Deposit requirement(deposit_req) Information regarding data users' responsibility for informing archives of their use of data through providing citations to the published work or providing copies of the manuscripts.

Availability status(status) A statement of the data availability. An archive may need to indicate that a collection is unavailable because it is embargoed for a period of time, because it has been superseded, because a new edition is imminent, etc. This element will rarely be used.

Special permissions(spec_perm) This element is used to determine if any special permissions are required to access a resource.
- Required(required) The required attribute is used to aid machine processing of this element. The default specification is "yes".
- Text(txt) A statement on the special permissions required to access the dataset.
- Form ID(form_id) The form_id indicates the number or ID of the special permissions form that the user must fill out.
- Form URL(form_url) The form_url is used to provide a link to a special online permissions form.
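The components recommended above for a citation requirement (primary investigator, name and abbreviation of the dataset, reference year, version number, website and, ideally, a DOI) can be assembled into a consistent string. The sketch below shows one possible layout; the function name, the ordering and punctuation of the citation, and all values are assumptions made for illustration, not a prescribed citation style.

```python
def build_citation(investigator, title, abbreviation, year, version,
                   url=None, doi=None):
    """Assemble a citation string from its recommended components."""
    parts = [f"{investigator}. {title} ({abbreviation}) {year}, version {version}."]
    if url:
        parts.append(f"Available at {url}.")
    if doi:
        parts.append(f"https://doi.org/{doi}")
    return " ".join(parts)


# Invented example values.
print(build_citation(
    investigator="National Statistics Office",
    title="Household Budget Survey",
    abbreviation="HBS",
    year=2021,
    version="1.2",
    url="https://example.org/catalog/hbs-2021",
))
```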

Restrictions(restrictions) Any restrictions on access to or use of the collection such as privacy certification or distribution restrictions should be indicated here. These can be restrictions applied by the author, producer, or distributor of the data. This element can for example contain a statement (extracted from the DDI documentation) like: "In preparing the data file(s) for this collection, the National Center for Health Statistics (NCHS) has removed direct identifiers and characteristics that might lead to identification of data subjects. As an additional precaution NCHS requires, under Section 308(d) of the Public Health Service Act (42 U.S.C. 242m), that data collected by NCHS not be used for any purpose other than statistical analysis and reporting. NCHS further requires that analysts not use the data to learn the identity of any persons or establishments and that the director of NCHS be notified if any identities are inadvertently discovered. Users ordering data are expected to adhere to these restrictions."

Notes on access and use(notes) Any additional information related to data access that is not contained in the specific metadata elements provided in the section "Data access and use".

DISCLAIMER AND COPYRIGHT

Disclaimer(disclaimer) A disclaimer limits the liability that the data producer or data custodian has regarding the use of the data. A standard legal statement should be used for all datasets from the same agency. The following formulation could be used: "The user of the data acknowledges that the original collector of the data, the authorized distributor of the data, and the relevant funding agency bear no responsibility for use of the data or for interpretations or inferences based upon such uses."

Copyright(copyright) The copyright statement that applies to the dataset.

OTHER INFORMATION

Notes on the study (study_notes) This element can be used to provide additional information on the study which cannot be accommodated in the specific metadata elements of the schema, in the form of a free text field.

CONTACTS

Contacts(contact) Users of the data may need further clarification and information on the terms of use and conditions to access the data. This set of elements is used to identify the contact persons who can be used as resource persons regarding problems or questions raised by the user community.
- Name(name) Name of the person. Note that in some cases, it might be better to provide a title/function than the actual name of the person, as people do not stay in their position forever.
- Affiliation(affiliation) Affiliation of the person.
- Email(email) The email element is used to indicate an email address for the contact individual mentioned in name. Ideally, a generic email address should be provided. A mail server can be configured so that all messages sent to the generic email address are automatically forwarded to selected staff members.
- URL(uri) URI for the person; it can be the URL of the organization the person belongs to.

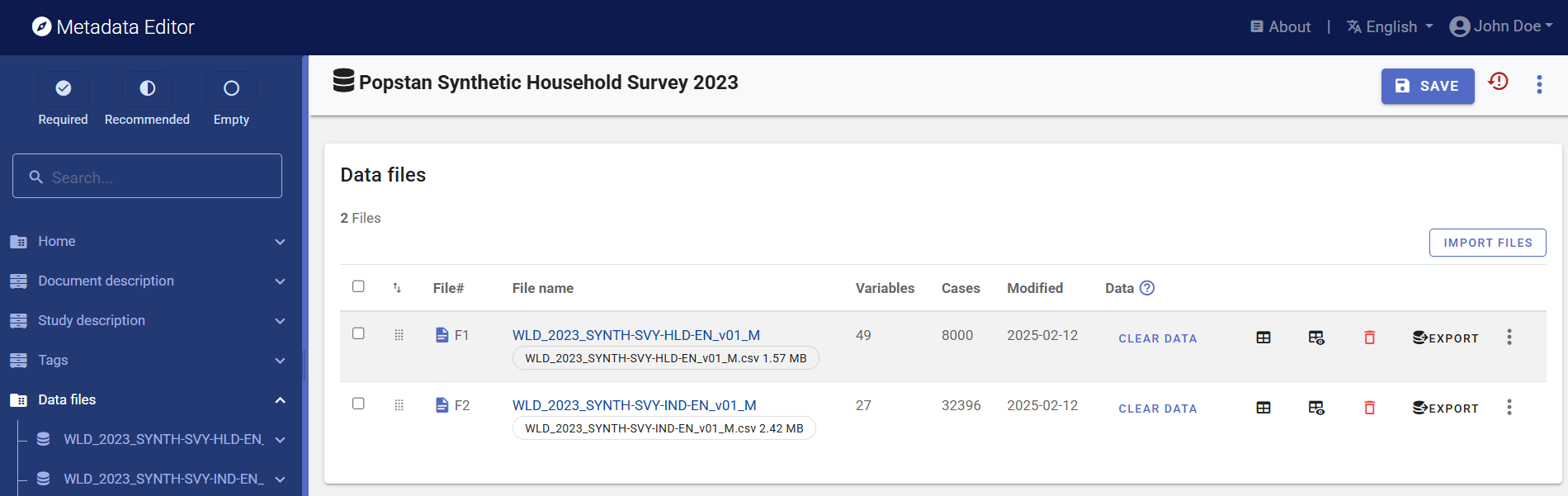

Data files

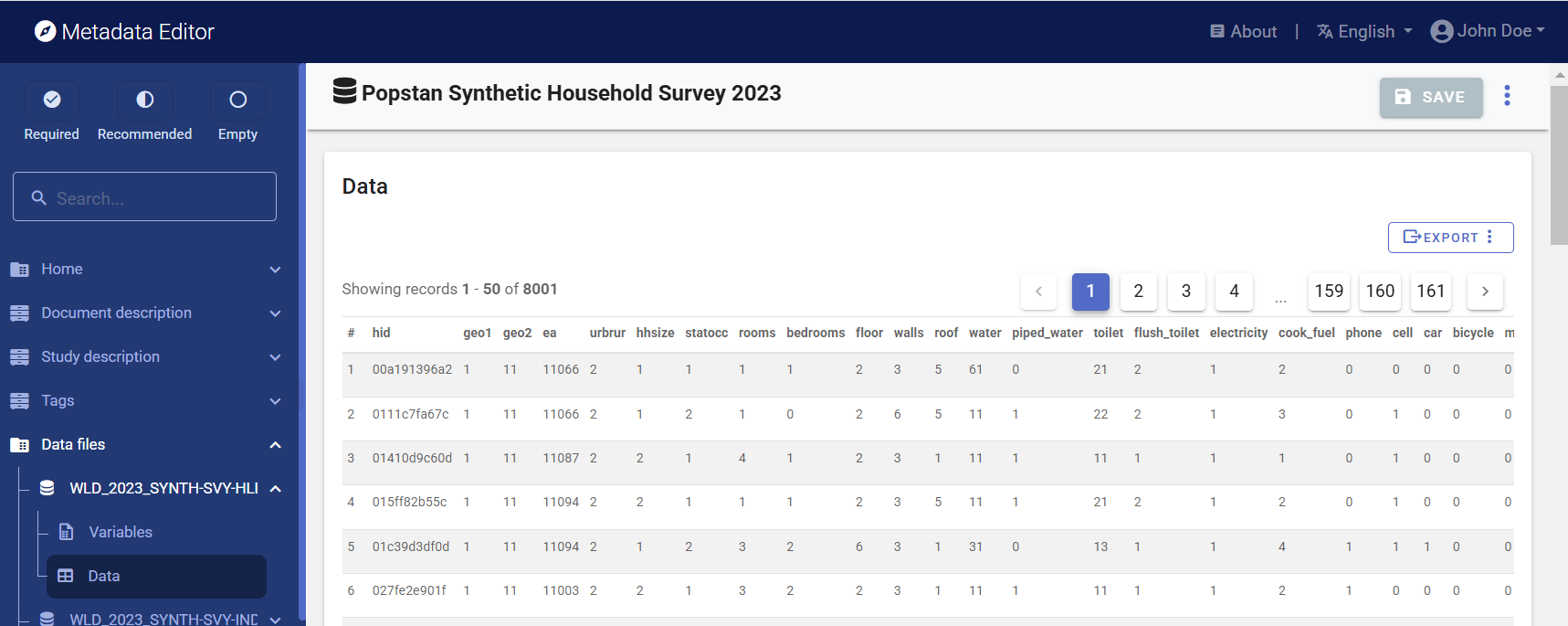

The Data files section of the DDI Metadata Editor is where structural metadata will be captured, including file-level and variable-level information.

Importing data files

Typically, the core of structural metadata will be automatically generated by importing the data files into the Metadata Editor. When no data file is available to be imported, this section will be left empty (in which case your metadata will be limited to descriptive and reference metadata), or a data dictionary can be entered manually, which may be a tedious process prone to errors.

To import data files, select Data files in the navigation tree, and click IMPORT in the Data files page. Select the file(s) to be imported.

The following formats are currently supported: Stata (.dta), SPSS (.sav), and CSV.

The Metadata Editor runs Python in the background (using the Pandas library). It will read the data files, and convert them to CSV format for internal use. The application will make a best guess to assign the type of each variable (continuous or categorical), extract the variable names and labels, extract the value labels, and generate (unweighted) summary statistics for all variables. The import process will result in a data dictionary for each data file.
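The kind of processing described above can be pictured with a few lines of Pandas. The sketch below is not the Metadata Editor's actual code: the data are invented, and the rule used to guess the variable type (a numeric variable with at most five distinct values is treated as categorical) is an assumption chosen only to illustrate what a "best guess" may look like.

```python
import pandas as pd

# Invented data standing in for an imported data file.
data = pd.DataFrame({
    "hh_id": [1, 2, 3, 4, 5, 6],
    "sex": [1, 2, 2, 1, 9, 2],
    "income": [1200.5, 980.0, 1530.25, 760.0, 2210.75, 1105.0],
})


def guess_type(series, max_categories=5):
    """Guess 'categorical' or 'continuous' (assumed rule, for illustration)."""
    if not pd.api.types.is_numeric_dtype(series):
        return "categorical"
    return "categorical" if series.nunique() <= max_categories else "continuous"


def describe(series):
    """Build unweighted summary statistics for one variable."""
    entry = {
        "type": guess_type(series),
        "valid": int(series.notna().sum()),
        "missing": int(series.isna().sum()),
    }
    if entry["type"] == "categorical":
        entry["frequencies"] = series.value_counts().sort_index().to_dict()
    else:
        entry["min"] = float(series.min())
        entry["max"] = float(series.max())
        entry["mean"] = float(series.mean())
    return entry


dictionary = {name: describe(data[name]) for name in data.columns}
for name, entry in dictionary.items():
    print(name, entry)
```

Note that such a rule classifies the identifier `hh_id` as continuous, which shows why the guessed variable types should be reviewed after import.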

The imported data files will now be listed in the navigation tree. Summary information on the imported data files is displayed in the Data files page.

IMPORTANT: The CSV version of the data will be stored on the server that hosts the Metadata Editor. All collaborators on your project will be able to see and download the data. If the data are confidential or sensitive, you can remove them from the server at any time. See Removing data files from server below.

Reordering data files

If the order in which the files appear in the Data files page needs to be modified, use the handle icons to move the files up or down (drag and drop).

Exporting data files

At any time, you may export the imported data files to any of the supported formats (currently Stata, SPSS, and CSV).

If you made any change to the metadata (editing variable and/or value labels), export the data files AFTER editing and before sharing them with the metadata; this ensures that the data and the metadata are fully consistent. But you should ALWAYS preserve a copy of the original datasets that you imported into the Metadata Editor.

Deleting data files

Data files can be deleted from the project. When you delete a data file, all related metadata will be removed, and the data for the deleted file will also be removed from the server.

Removing data files from server

Imported data files are by default stored in the web server where the Metadata Editor has been installed. If you share a project with other users, you may grant them access to the data. If your files cannot be shared, you have the option to remove them from the server after the metadata extraction has been completed.

If you want to remove the data from the server, use the Clear data option provided in the Data files page. Note that after removing the data from the server, you will not be able to update the summary statistics anymore. If you plan to edit the value labels, or apply weights to generate weighted summary statistics, do that BEFORE you clear the data files from the server. And if you want to ensure that no collaborator has access to the data, clear the data files BEFORE sharing the project or adding it to a collection.

If you are not allowed to upload your data files to the server, one option is to extract one observation from each data file, anonymize it, and import these single-observation files into the Metadata Editor. The data dictionary will then be generated as if you had imported the full dataset, but the summary statistics will be invalid. You will have to remove all summary statistics for all variables. See the section Variables below.
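The single-observation approach can be prepared outside the Metadata Editor. The sketch below is only an illustration, assuming the data are available as CSV text; the function name `single_observation_csv` and the sample columns are hypothetical. It keeps the header and the first row, and replaces every value with a neutral placeholder so that no real observation is uploaded. For Stata or SPSS files, the equivalent step would be done in that software so that variable and value labels are preserved.

```python
import csv
import io


def single_observation_csv(source_text: str) -> str:
    """Return CSV text with the original header and one placeholder row."""
    reader = csv.reader(io.StringIO(source_text))
    header = next(reader)
    first_row = next(reader)

    def placeholder(value: str) -> str:
        # Keep the column recognizable as numeric or text
        # without keeping the real value.
        try:
            float(value)
            return "0"
        except ValueError:
            return "x"

    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(header)
    writer.writerow([placeholder(value) for value in first_row])
    return out.getvalue()


# Hypothetical two-household file.
sample = "hhid,region,age\n1001,North,34\n1002,South,51\n"
anonymized = single_observation_csv(sample)
print(anonymized)
```

Because the placeholder row carries no real information, any summary statistics computed from it are meaningless, which is why they must be removed after import.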

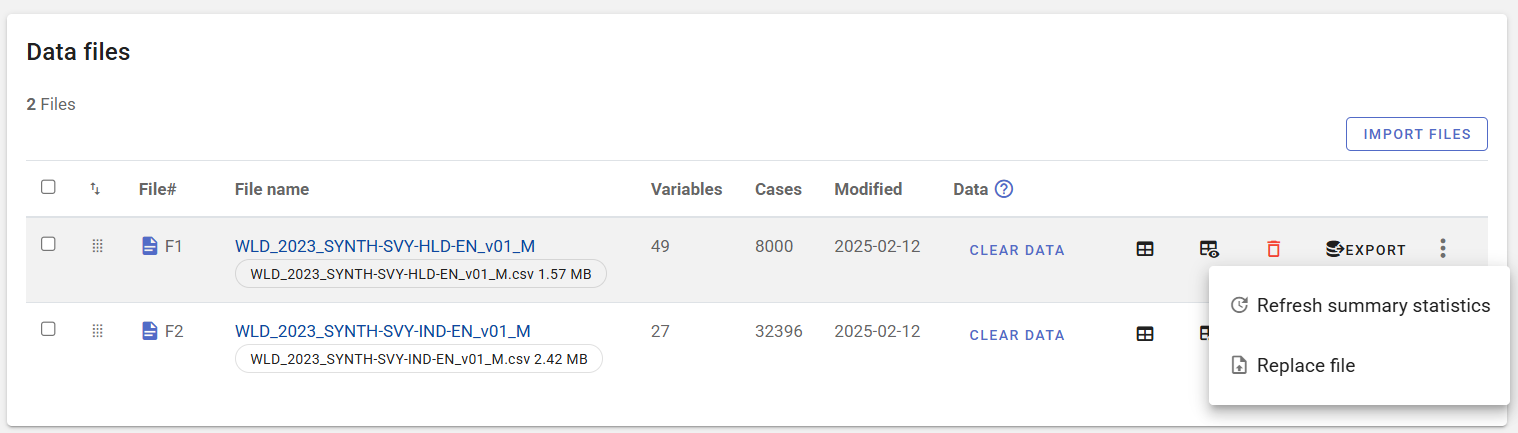

Replacing data files

You can replace a data file by selecting the option Replace file in the option menu for a data file in the Data files page. You can only replace a data file with a data file that has the exact same structure (same variables, in the same order). The application will replace the data, but leave the metadata already entered in the Metadata Editor untouched. Replacing a data file is thus a solution to "refresh the data" (and summary statistics) without losing updated metadata.
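Before replacing a file, it can be useful to verify the "same structure" condition yourself. The following is a minimal sketch, assuming both versions of the file are available as CSV text; `same_structure` is a hypothetical helper, not a function of the Metadata Editor, and it only compares variable names and their order.

```python
import csv
import io


def same_structure(old_csv: str, new_csv: str) -> bool:
    """True if both CSV texts list the same variable names in the same order."""
    old_header = next(csv.reader(io.StringIO(old_csv)))
    new_header = next(csv.reader(io.StringIO(new_csv)))
    return old_header == new_header


original = "hhid,region,age\n1001,North,34\n"
refreshed = "hhid,region,age\n1001,North,35\n1002,South,51\n"
reordered = "hhid,age,region\n1001,35,North\n"

print(same_structure(original, refreshed))  # True: more rows, same variables
print(same_structure(original, reordered))  # False: variable order differs
```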

Refreshing summary statistics

Some actions on data files (modifying Categories description or applying weighting coefficients) will require that the summary statistics be updated. The Metadata Editor does not automatically update statistics each time a change is made. Instead, it adds visual cues (red icons) indicating that the data no longer fully correspond to the metadata, and that the summary statistics need to be refreshed. The Refresh summary statistics option in the option menu next to the data file description (in the Data files page) serves this purpose. Another option to refresh statistics is to click on the Refresh stats icon in the Variable list frame of the Variable page (see Refresh statistics below).

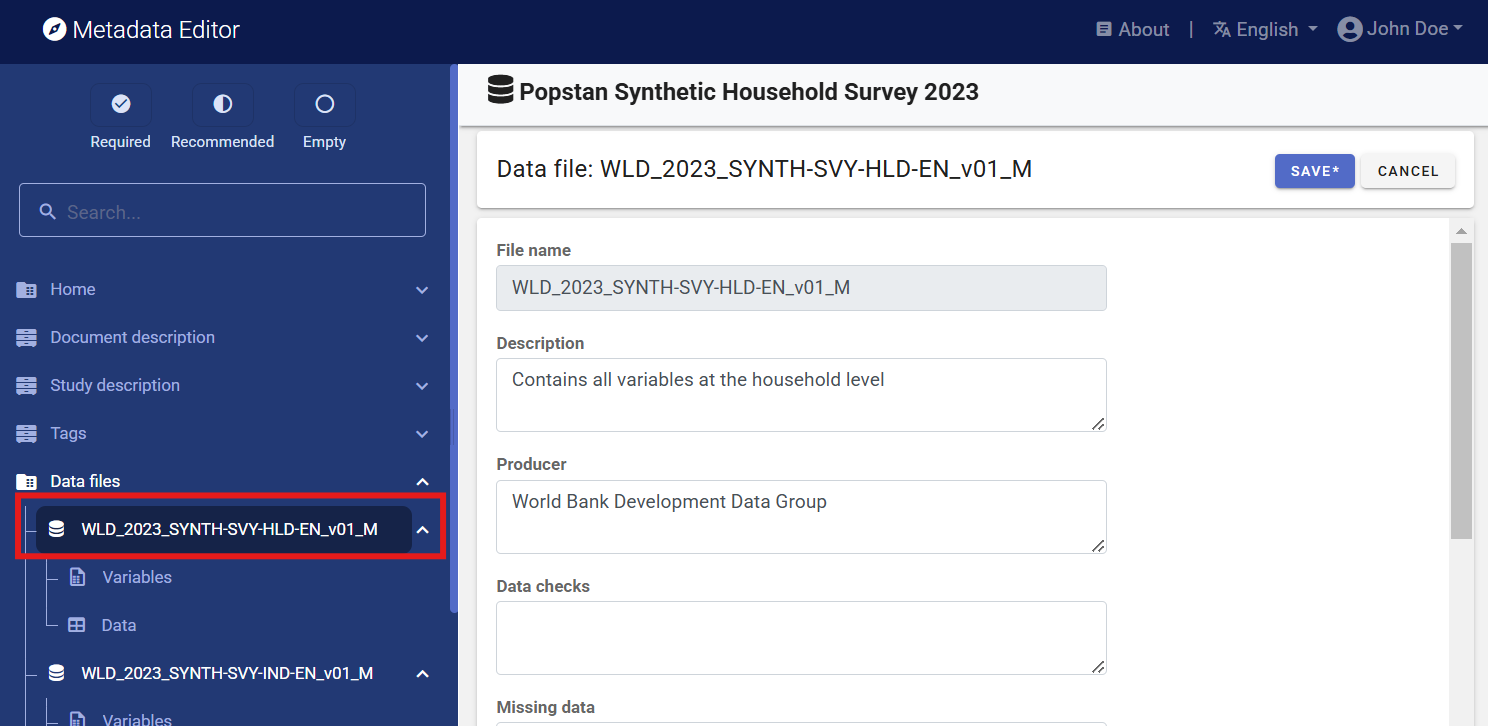

File description

Clicking on a file name in the navigation tree will open the File description page where the information on the data file can be entered. Some information, such as the file name and the number of variables and observations, is automatically generated when data files are imported.

- Description (description). The file_id and file_name elements provide limited information on the content of the file. The description element is used to provide a more detailed description of the file content. This description should clearly distinguish collected variables and derived variables. It is also useful to indicate the availability in the data file of some particular variables such as the weighting coefficients. If the file contains derived variables, it is good practice to refer to the computer program that generated them. Information about the data file(s) that comprises a collection.

- Producer (producer). The name of the agency that produced the data file. Most data files will have been produced by the survey primary investigator. In some cases, however, auxiliary or derived files from other producers may be released with a data set. This may for example be a file containing derived variables generated by a researcher.

- Data checks (data_checks). Use this element if needed to provide information about the types of checks and operations that have been performed on the data file to make sure that the data are as correct as possible, e.g. consistency checking, wildcode checking, etc. Note that the information included here should be specific to the data file. Information about data processing checks that have been carried out on the data collection (study) as a whole should be provided in the Data editing element at the study level. You may also provide here a reference to an external resource that contains the specifications for the data processing checks (that same information may also be provided in the Data editing field in the Study Description section).

- Missing data (missing_data). A description of missing data (number of missing cases, cause of missing values, etc.)

- Version (version). The version of the data file. A data file may undergo various changes and modifications. File-specific versions can be tracked in this element. This field will in most cases be left empty.

- Notes (notes). This field aims to provide information on the specific data file not covered elsewhere.
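To make the elements above more concrete, the sketch below assembles a file description as a Python dictionary and prints it as JSON. The element names (file_id, file_name, description, producer, data_checks, missing_data, version, notes) are those listed above; all values are invented for illustration, and the exact JSON layout produced by the Metadata Editor may differ.

```python
import json

# All values below are hypothetical examples.
file_description = {
    "file_id": "F1",
    "file_name": "household.csv",
    "description": (
        "Household-level file. Contains collected variables on dwelling "
        "characteristics and one derived variable (the sample weight)."
    ),
    "producer": "National Statistics Office (example)",
    "data_checks": "Range and consistency checks applied to all variables.",
    "missing_data": "Unreported values are coded 99.",
    "version": "",  # left empty in most cases
    "notes": "",
}

file_description_json = json.dumps(file_description, indent=2)
print(file_description_json)
```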

Variables

The variable description section of the DDI Codebook standard provides you with the possibility to create a very rich data dictionary. While some of the variable-level metadata will be imported from the data files, the DDI Codebook contains many elements that can enrich the data dictionary. This additional information is very useful both for discoverability and for increased usability of the data.

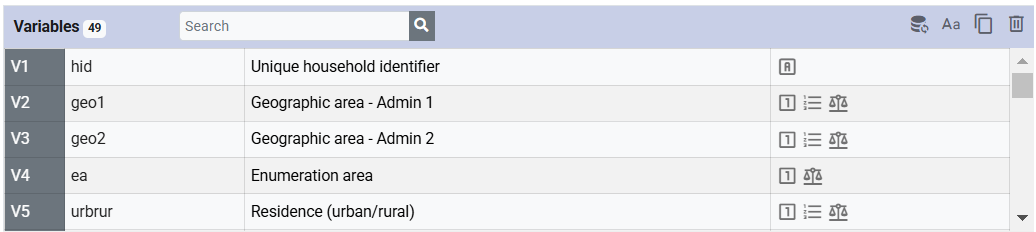

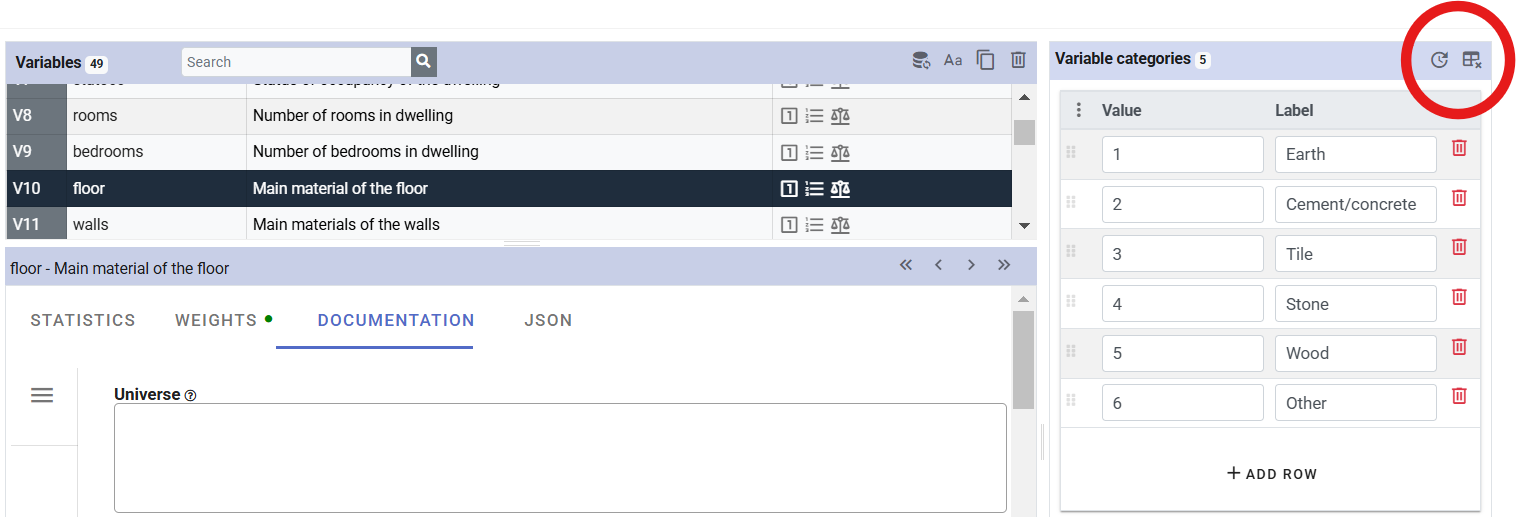

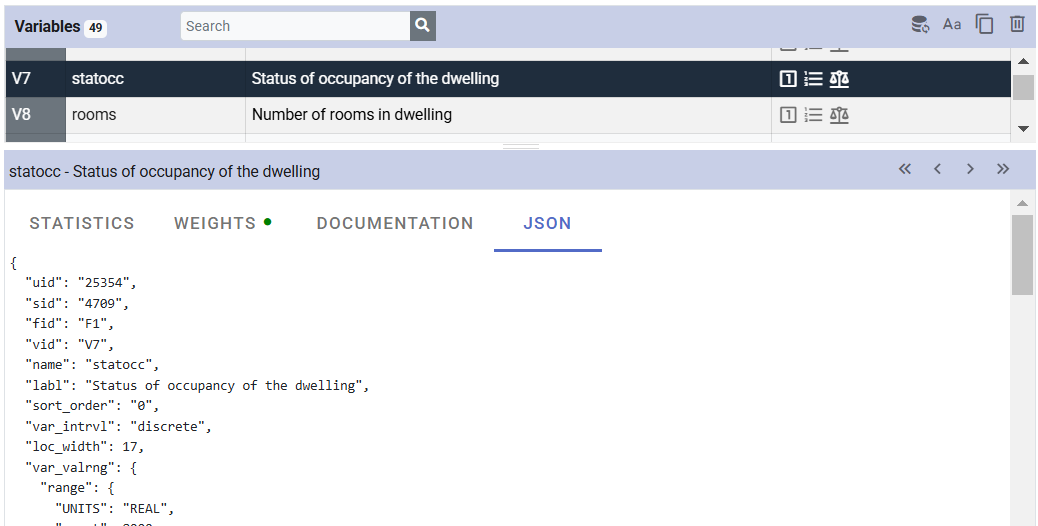

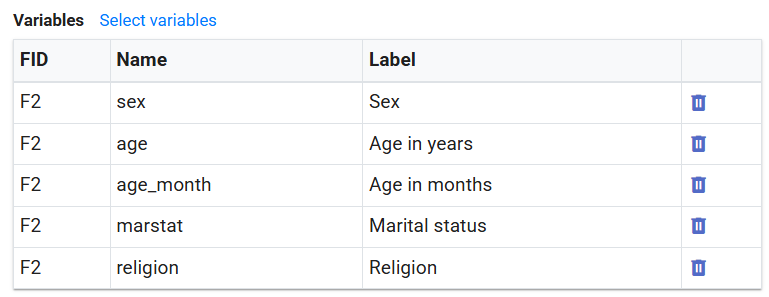

After importing a data file in the Metadata Editor, you can access information on the variables it contains by clicking on Variables below the file name in the navigation tree. This will open the Variables page, which contains four frames:

- Variables. Provides the list of variables in the data file, with their name, label, and status icons.

- Variable categories. Describes the categories (value labels) for a categorical variable selected in the list of variables.

- Variable description. Contains information on the status and type of the variable selected in the variable list, on its range, and on missing values.

- Statistics, weights, documentation, and JSON. Select the summary statistics to be included in the metadata, apply sampling weights if relevant, edit the documentation of the selected variable(s), and view the metadata in JSON format.

These four frames are described in detail below.

Variable list

The variable list displays the list of variables in the selected data file, with their name and label. A search bar is provided to locate variables of interest (keyword search on variable name and label).

The variable labels can be edited (double click on a label to edit it). All variables should have a label that provides a short but clear indication of what the variable contains. Ideally, all variables in a data file will have a different label. File formats like Stata or SPSS often contain variable labels. Variable labels can also be found in data dictionaries in software applications like Survey Solutions or CSPro. Avoid using the question itself as a label (specific elements are available to capture the literal question text; see below). Think of a label as what you would want to see in a tabulation of the variables. Keep in mind that software applications like Stata and others impose a limit on the number of characters in a label (often, 80).
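The labeling recommendations above (every variable labeled, labels unique within a file, labels within the character limit) lend themselves to a quick automated check. The sketch below is one possible way to do this outside the Metadata Editor; `check_labels`, the 80-character constant, and the sample labels are assumptions made for the example.

```python
from collections import Counter

MAX_LABEL_LENGTH = 80  # assumption: the common limit mentioned in the text


def check_labels(labels: dict) -> dict:
    """Report variables with a missing, overlong, or duplicated label."""
    counts = Counter(label for label in labels.values() if label)
    return {
        "missing": [name for name, label in labels.items() if not label],
        "too_long": [
            name for name, label in labels.items()
            if len(label) > MAX_LABEL_LENGTH
        ],
        "duplicated": [
            name for name, label in labels.items()
            if label and counts[label] > 1
        ],
    }


# Hypothetical variable names and labels.
labels = {
    "hhid": "Household identifier",
    "q_01": "Sex of household member",
    "q_02": "",
    "q_03": "Sex of household member",
}
report = check_labels(labels)
print(report)
```

In this example, q_02 is reported as unlabeled, and q_01 and q_03 are reported as sharing the same label.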

The variable names cannot be edited.

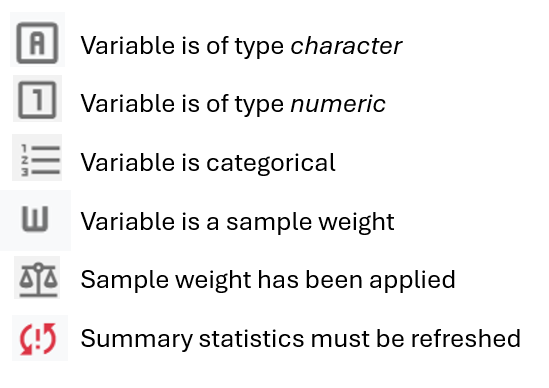

A set of icons provides information on the status of each variable, as follows:

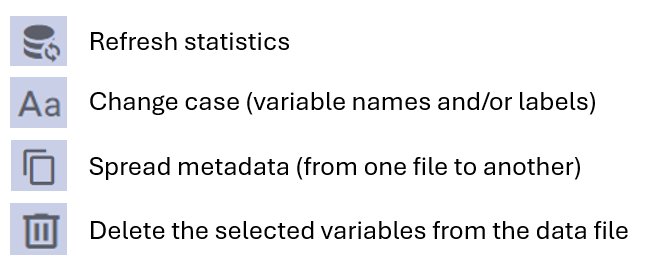

The icons shown on top of the variable list perform the following actions on variables:

Refresh statistics: Instructs the Metadata Editor to re-read the data files and update the summary statistics for all variables. You will want to refresh the summary statistics after performing actions like applying sample weights or modifying the value labels (categories) of some variables. Note that this will not be possible if you have cleared the data from the server.

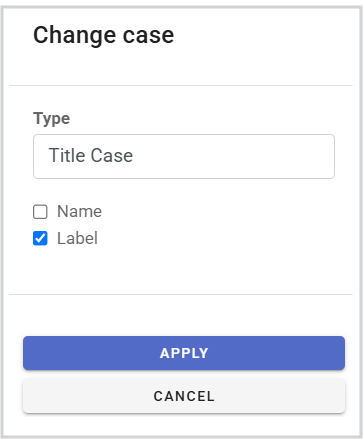

Change case: This allows you to change the case of the variable names and/or variable labels (changing them to uppercase, lowercase, or sentence case). Note that changing variable names is not always a good idea. Software such as Stata and R is case sensitive, so changing the case of a variable name is equivalent to renaming the variable, which means that some scripts written to run on the original data will no longer run on data with renamed variables.

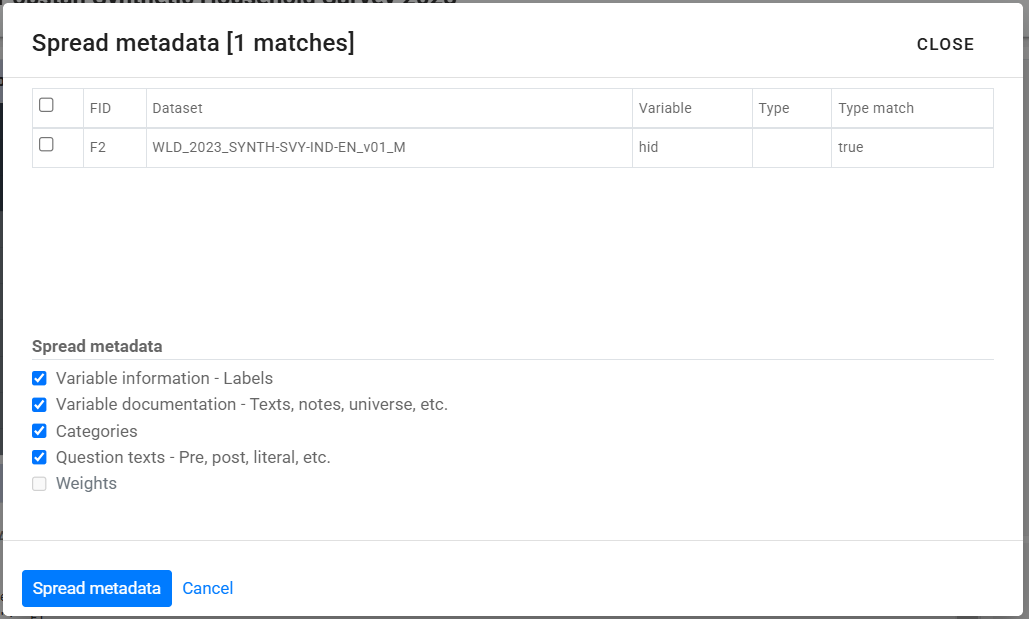

Spread metadata: In many micro-datasets, a small number of variables will be common to all data files. For example, in a sample household survey, the household identifier, and the variables that indicate the geographic location of the household, may be repeated in all files. In such cases, you can document the variables in one data file, select these common variables, and "spread metadata" to automatically apply the metadata to variables with the same name in other data files.

Delete selection: The Metadata Editor is not a data editor; data files cannot be modified, with the exception that variables can be deleted from a data file. This option is provided to drop variables like temporary variables or direct identifiers that may have been accidentally left in the data files. To drop variables, select them in the variable list (using the Shift and Ctrl keys as needed to select multiple variables), then click on the Delete selection icon.

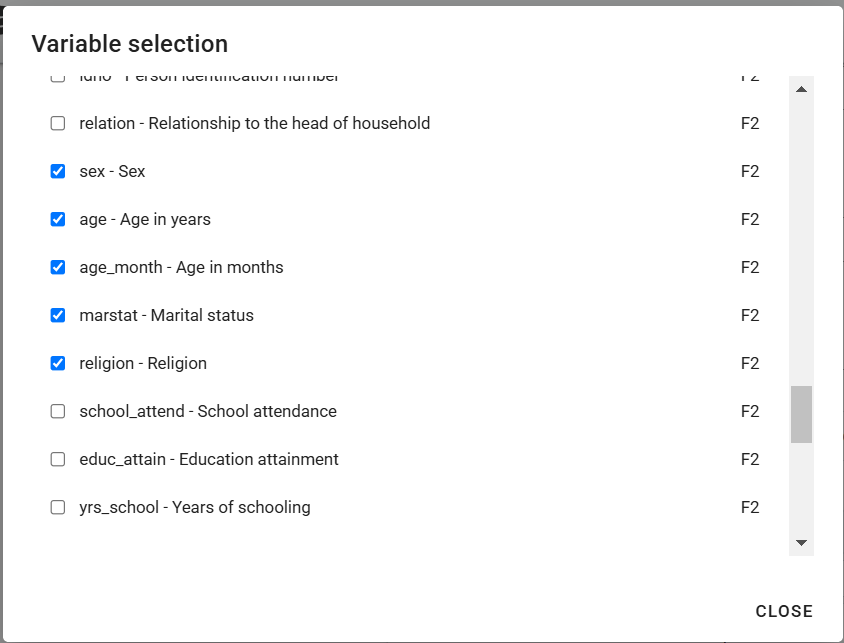
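The logic of the Spread metadata action described above can be illustrated with a small sketch. This is not the Metadata Editor's implementation; it only assumes that each data file is represented as a dictionary of variables keyed by name, and the function `spread_metadata` and the sample files are hypothetical. Metadata are copied only to variables that have the same name in the other files.

```python
def spread_metadata(source, targets, names):
    """Copy metadata of the selected variables to same-named variables elsewhere."""
    updated = []
    for file_name, variables in targets.items():
        for name in names:
            if name in source and name in variables:
                # Copy the metadata so that files do not share one object.
                variables[name] = dict(source[name])
                updated.append((file_name, name))
    return updated


# Variables documented in one file (hypothetical household file).
household = {
    "hhid": {"label": "Household identifier"},
    "region": {"label": "Region of residence"},
    "hhsize": {"label": "Household size"},
}
# Other files where the common variables are not yet documented.
other_files = {
    "individual": {"hhid": {"label": ""}, "region": {"label": ""}, "age": {"label": ""}},
    "consumption": {"hhid": {"label": ""}, "item": {"label": ""}},
}

updated_pairs = spread_metadata(household, other_files, ["hhid", "region"])
print(updated_pairs)
print(other_files["individual"]["hhid"]["label"])  # Household identifier
```

Variables that do not exist in a target file (here, region in the consumption file) are simply skipped, and variables that were not selected (hhsize) are left untouched.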

Variable categories (value labels)

When the data are imported, the Metadata Editor makes a best guess about the type of each variable. If a variable is identified as categorical, the Variable categories frame will be automatically populated with codes and value labels. These codes and labels can be edited in the Variable categories frame. Codes can also be added if necessary.

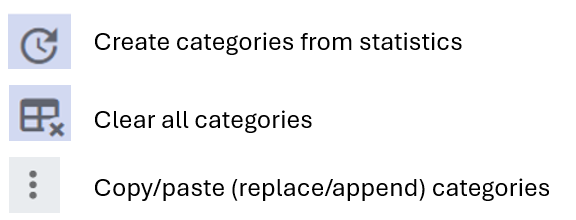

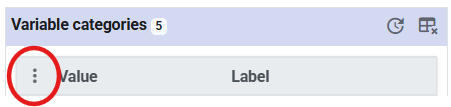

The Variable categories frame contains the following icon toolbar:

If a categorical variable was not identified as such when the data were imported, the Metadata Editor provides an option to automatically Create categories. This option is accessed by clicking on the Create categories icon at the top of the frame. The application will then extract the list of codes found for the variable in the data, and pre-populate the list of categories with these codes. The labels corresponding to each category can then be added by the data curator. An option (Clear all icon) is also provided to remove all content from the list of categories.
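Conceptually, creating categories amounts to listing the distinct codes observed for a variable and attaching an empty label to each. The sketch below illustrates that idea under simple assumptions (values held in a Python list, categories stored as value/label pairs); `create_categories` is a hypothetical name, and the labels reuse the sex variable example given earlier in this chapter.

```python
def create_categories(values):
    """Return one category per distinct code, sorted, with an empty label."""
    return [{"value": code, "label": ""} for code in sorted(set(values))]


# Observed codes for a hypothetical variable Q_01 (sex of respondent).
q_01 = [1, 2, 2, 1, 9, 2]
categories = create_categories(q_01)
print(categories)

# The data curator then supplies the labels.
labels = {1: "Male", 2: "Female", 9: "Unreported"}
for category in categories:
    category["label"] = labels[category["value"]]
print(categories)
```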

A category can be removed from the list by clicking on the trash icon.

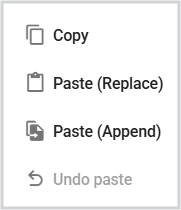

An option is also provided to copy/paste content in the list of value labels. This allows you, for example, to copy code lists from an application like MS-Excel and paste them into the Variable categories grid. The pasted information can either replace the existing content of the grid (if any), or be appended to it.

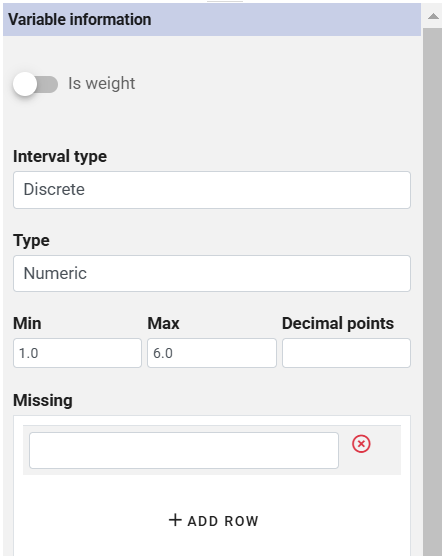

Variable information

The Variable information frame provides options to tag a variable as being a sample weight, to edit the type of the variable, the range, and the format of the data it contains, and to inform the application of values to be treated as missing values.

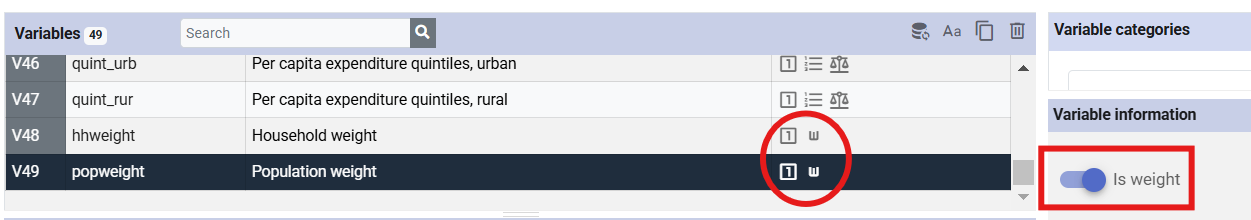

Is weight: The Is weight toggle is used to tag variables that are sample weights, if any.

Interval type: For numeric variables, the interval type can be set to Continuous or Discrete. This will impact how the data are documented and what summary statistics are produced (frequencies will not be calculated for continuous variables; variable categories will only be created for discrete variables). When data are imported, the application makes a best guess about the interval type. The data curator should browse through all variables to ensure the interval type has been properly set.

Type: This option informs the system of the format type of the variable, with options numeric, character (string variables), and fixed.

Min, Max, and Decimal point: A range of valid values can be entered in this element; values outside the range will trigger a validation error.

Missing: The proper use of data requires proper treatment of missing values. The application (and data users) needs to know what values in the data files are to be considered as missing. When data are imported from Stata or SPSS, if the system missing values were used in the original files, the missing values will be shown as a period (.). But other values may represent missing values. In some survey datasets, the code 99 for example may be used to represent "unknown or unreported age". When such values (other than system-missing) are used, they must be indicated as representing missing data, otherwise the summary statistics will be incorrect (e.g., 99 would be treated as a valid age even though it means "unreported"). One or multiple values representing missing can be entered for the variable.
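The effect of an undeclared missing-value code on summary statistics is easy to demonstrate. The sketch below uses made-up ages in which 99 stands for "unreported"; `valid_mean` is a hypothetical helper that excludes the declared missing codes before computing the mean.

```python
from statistics import mean


def valid_mean(values, missing_codes=()):
    """Mean of the values, excluding None and any declared missing codes."""
    valid = [v for v in values if v is not None and v not in missing_codes]
    return mean(valid)


ages = [25, 40, 99, 31, 99]  # 99 means "unreported", not an age of 99

mean_with_99 = valid_mean(ages)           # 99 wrongly treated as a valid age
mean_without_99 = valid_mean(ages, {99})  # 99 declared as a missing value

print(mean_with_99)     # 58.8
print(mean_without_99)  # 32
```

With only five observations, leaving the code 99 undeclared moves the mean age from 32 to 58.8, which shows why missing-value codes must be entered before the statistics are refreshed.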

Variable documentation

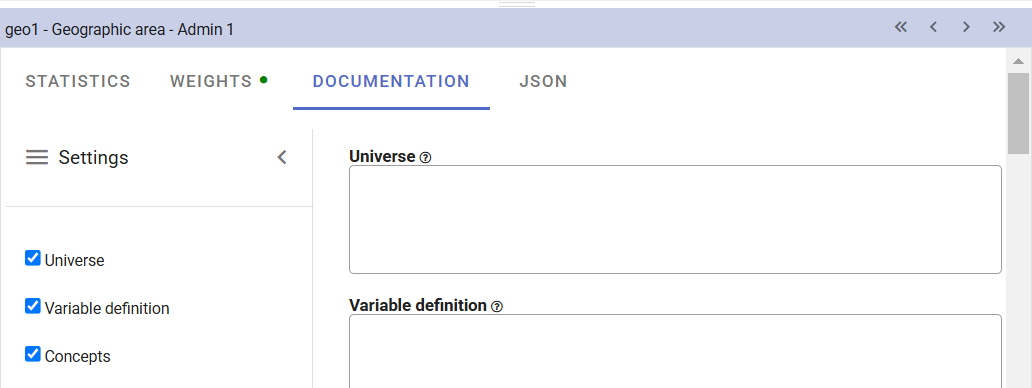

The last frame of the Variable page is one where most of the additional metadata related to variables will be entered.

Header bar: The header bar of the frame shows the name and label of the variable selected in the Variable list. It also shows four icons, which allow you to browse variables without having to select them in the Variable list.

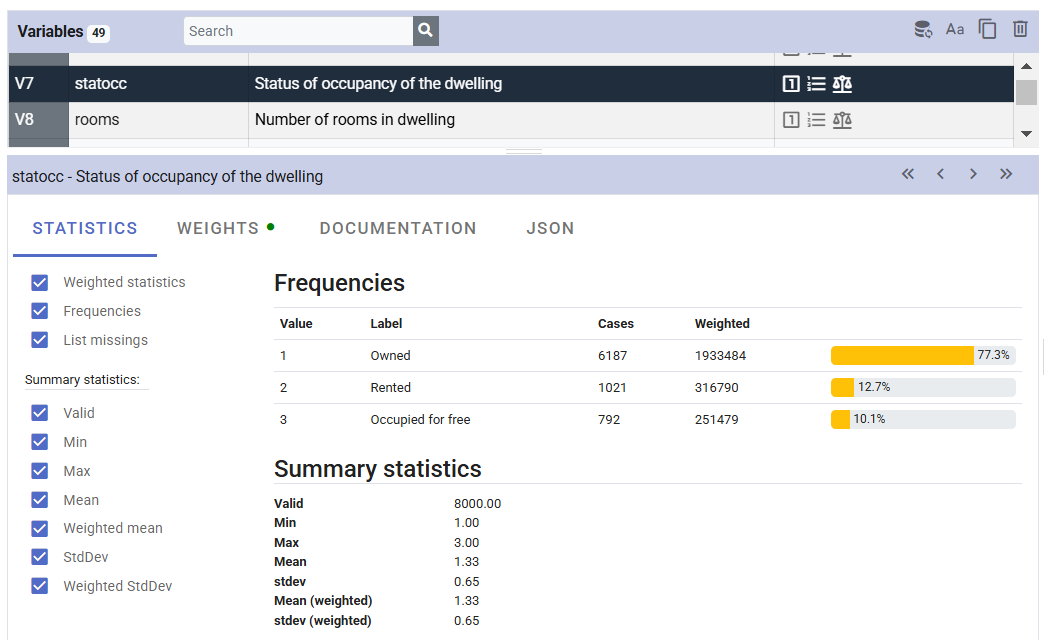

Statistics

The STATISTICS tab shows the summary statistics generated by the application when the data are imported (or when the statistics are refreshed). If you have applied weighting coefficients (see Weights below), the summary statistics will include both weighted and unweighted statistics. Otherwise, the statistics are unweighted. Remember to properly document values that should be interpreted as missing values, to ensure that statistics like means and standard deviations are valid (see Missing above).
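The difference between weighted and unweighted statistics can be illustrated with a few lines of code. This is a generic sketch of a weighted mean and of weighted frequencies, not a description of how the Metadata Editor computes them; the function names and the data are invented for the example.

```python
def weighted_mean(values, weights):
    """Mean of the values, each counted in proportion to its weight."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def weighted_frequencies(codes, weights):
    """Sum of weights per category code."""
    totals = {}
    for code, weight in zip(codes, weights):
        totals[code] = totals.get(code, 0) + weight
    return totals


ages = [20, 30, 40]
sex = [1, 2, 2]
weights = [1.0, 1.0, 2.0]  # the third respondent represents twice as many people

unweighted_age = sum(ages) / len(ages)
weighted_age = weighted_mean(ages, weights)
weighted_sex = weighted_frequencies(sex, weights)

print(unweighted_age)  # 30.0
print(weighted_age)    # 32.5
print(weighted_sex)    # {1: 1.0, 2: 3.0}
```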

This tab also lets you select the summary statistics to be included in the metadata. Uncheck the statistics that you do not want to store in the metadata. For example, statistics like the mean or standard deviation are usually not meaningful for categorical variables and should not be kept. Only keep statistics that are meaningful. Note that you may select a group of variables in the Variable list and apply a selection to all selected variables at once.

Note that summary statistics that will be stored in the metadata are statistics for each variable taken independently. No cross tabulation is possible.

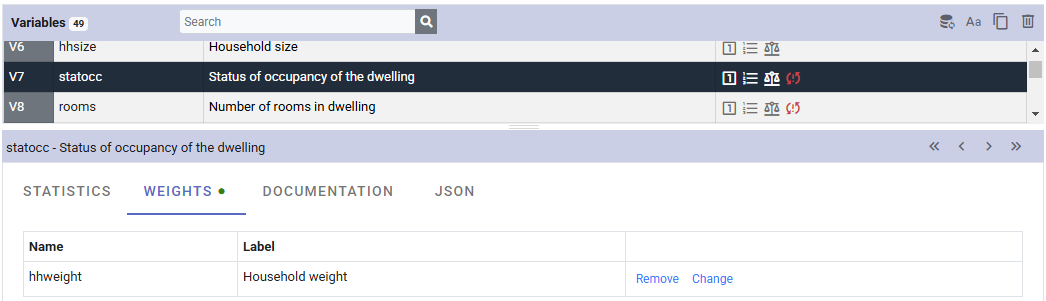

Weights