Model - Scikit-Learn

Imports

import os

import pandas as pd

from iqual import iqualnlp, evaluation, crossval

Load annotated (human-coded) and unannotated datasets

data_dir = "../../data"

human_coded_df = pd.read_csv(os.path.join(data_dir,"annotated.csv"))

uncoded_df = pd.read_csv(os.path.join(data_dir,"unannotated.csv"))

Split the data into training and test sets

from sklearn.model_selection import train_test_split

train_df, test_df = train_test_split(human_coded_df,test_size=0.25)

print(f"Train Size: {len(train_df)}\nTest Size: {len(test_df)}")

Train Size: 7470

Test Size: 2490
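The reported sizes follow from the 9960 annotated rows (7470 + 2490). With a float `test_size`, scikit-learn rounds the test set up (ceil(0.25 × 9960) = 2490) and gives the remainder to training. No `random_state` is passed here, so the rows in each split, and therefore the scores reported later, may change from run to run. The stdlib-only sketch below reproduces the sizing rule and adds a seeded shuffle. `split_sizes` and `shuffle_split` are hypothetical helpers, not part of scikit-learn or iqual.

```python
import math
import random


def split_sizes(n_samples, test_size):
    """Return (n_train, n_test) for a float test_size, rounding the test set up."""
    n_test = math.ceil(test_size * n_samples)
    n_train = n_samples - n_test
    return n_train, n_test


def shuffle_split(rows, test_size, seed=None):
    """Shuffle row positions with a seeded RNG, then cut off the test set.

    Reusing the same seed reproduces the same split, which is the role
    random_state plays in train_test_split.
    """
    rng = random.Random(seed)
    order = list(range(len(rows)))
    rng.shuffle(order)
    _, n_test = split_sizes(len(rows), test_size)
    test = [rows[i] for i in order[:n_test]]
    train = [rows[i] for i in order[n_test:]]
    return train, test


print(split_sizes(9960, 0.25))  # (7470, 2490)
```

In the notebook, the equivalent change would be `train_test_split(human_coded_df, test_size=0.25, random_state=42)`. The seed value is arbitrary.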

Configure training data

### Select Question and Answer Columns

question_col = 'Q_en'

answer_col = 'A_en'

### Select a code

code_variable = 'marriage'

### Create X and y

X = train_df[[question_col,answer_col]]

y = train_df[code_variable]

Initialize the model

# Step 1: Instantiate the model class

iqual_model = iqualnlp.Model()

# Step 2: Add layers to the model

# Add the text columns and choose a feature-extraction model (available options: scikit-learn, spacy, sentence-transformers, precomputed (pickled dictionary))

iqual_model.add_text_features(question_col,answer_col,model='TfidfVectorizer')

# Step 3: Add a feature transforming layer (optional)

# A. Choose a feature scaler. Available options:

# any scikit-learn scaler from `sklearn.preprocessing`

# iqual_model.add_feature_transformer(name='Normalizer', transformation="FeatureScaler")

# OR

# B. Choose a dimensionality reduction model. Available options:

# - Any scikit-learn dimensionality reduction model from `sklearn.decomposition`

# - Uniform Manifold Approximation and Projection (UMAP) using umap.UMAP (https://umap-learn.readthedocs.io/en/latest/)

# iqual_model.add_feature_transformer(name='TruncatedSVD', transformation="DimensionalityReduction")

# Step 4: Add a classifier layer

# Choose a primary classifier model (Available options: any scikit-learn classifier)

iqual_model.add_classifier(name = "LogisticRegression")

# Step 5: Add a threshold layer. This is optional, but recommended for binary classification

iqual_model.add_threshold(scoring_metric='f1')

# Step 6: Compile the model

iqual_model.compile()

Pipeline(steps=[('Input',

FeatureUnion(transformer_list=[('question',

Pipeline(steps=[('selector',

FunctionTransformer(func=<function column_selector at 0x000002B49C2C9820>,

kw_args={'column_name': 'Q_en'})),

('vectorizer',

Vectorizer(analyzer='word',

binary=False,

decode_error='strict',

dtype=<class 'numpy.float64'>,

encoding='utf-8',

env='scikit-learn',

input='co...

tokenizer=None,

use_idf=True,

vocabulary=None))]))])),

('Classifier',

Classifier(C=1.0, class_weight=None, dual=False,

fit_intercept=True, intercept_scaling=1,

l1_ratio=None, max_iter=100,

model='LogisticRegression', multi_class='auto',

n_jobs=None, penalty='l2', random_state=None,

solver='lbfgs', tol=0.0001, verbose=0,

warm_start=False)),

('Threshold', BinaryThresholder())])
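The compiled pipeline shows that each text column (here `Q_en` and `A_en`) gets its own branch, a column selector followed by a vectorizer. The branches sit inside a `FeatureUnion`, which concatenates their outputs column-wise before the classifier. The plain-Python sketch below illustrates that idea, assuming scikit-learn's `TfidfVectorizer` defaults: lowercasing, token pattern `(?u)\b\w\w+\b`, raw term counts, `smooth_idf=True` and `norm='l2'`. It is illustrative only, and iqual's `Vectorizer` wrapper may add behavior not shown here. The helper names and toy strings are hypothetical.

```python
import math
import re
from collections import Counter

# scikit-learn's default token_pattern: runs of two or more word characters
TOKEN_RE = re.compile(r"(?u)\b\w\w+\b")


def tokenize(text):
    return TOKEN_RE.findall(text.lower())


def fit_tfidf(docs):
    """Learn a sorted vocabulary and smoothed IDF weights from a list of strings."""
    n_docs = len(docs)
    doc_freq = Counter()
    for doc in docs:
        doc_freq.update(set(tokenize(doc)))
    vocab = sorted(doc_freq)
    # smooth_idf=True: idf(t) = ln((1 + n) / (1 + df(t))) + 1
    idf = {t: math.log((1 + n_docs) / (1 + doc_freq[t])) + 1.0 for t in vocab}
    return vocab, idf


def transform_tfidf(doc, vocab, idf):
    """Raw term counts times IDF, then scaled to unit L2 length (norm='l2')."""
    counts = Counter(tokenize(doc))
    row = [counts[t] * idf[t] for t in vocab]
    length = math.sqrt(sum(v * v for v in row))
    return [v / length for v in row] if length else row


def union_features(question, answer, q_model, a_model):
    """Concatenate the question and answer vectors, as a FeatureUnion does."""
    return transform_tfidf(question, *q_model) + transform_tfidf(answer, *a_model)


# Toy strings, not taken from the dataset
questions = ["What are your hopes for the children?", "What else do you hope for?"]
answers = ["The daughter will study, then marry.", "We will save for the wedding."]
q_model = fit_tfidf(questions)  # fitted on the question column only
a_model = fit_tfidf(answers)    # fitted on the answer column only
row = union_features(questions[0], answers[0], q_model, a_model)
print(len(row))                            # 21 (11 question terms + 10 answer terms)
print(round(sum(v * v for v in row), 6))   # 2.0: each block has unit length
```

Because each block is already unit-length, the concatenated row has a squared L2 norm of 2 whenever both texts contain at least one token. A row-wise scaler such as `sklearn.preprocessing.Normalizer` (option A in Step 3) would rescale it to unit length.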

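The last two pipeline steps turn features into labels. `LogisticRegression` produces a probability, and `BinaryThresholder` (added with `scoring_metric='f1'`) converts it into 0/1. The sketch below assumes the thresholder scans candidate cutoffs over predicted probabilities and keeps the one that maximizes F1. That is a plausible reading of the option name, not iqual's documented implementation, and `best_threshold` is a hypothetical helper. For comparison, scikit-learn's plain `LogisticRegression.predict` effectively uses a fixed 0.5 cutoff.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function: maps a decision score to a probability."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def f1_score(y_true, y_pred):
    """Binary F1 for the positive class (label 1)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def best_threshold(y_true, probs):
    """Try each observed probability as a cutoff and keep the F1-maximizing one."""
    best_t, best_f1 = 0.5, -1.0
    for t in sorted(set(probs)):
        preds = [1 if p >= t else 0 for p in probs]
        score = f1_score(y_true, preds)
        if score > best_f1:
            best_t, best_f1 = t, score
    return best_t, best_f1


# Toy decision scores (w.x + b) and labels, not taken from the notebook
scores = [-2.2, -0.4, -0.6, 1.4]
y_true = [0, 0, 1, 1]
probs = [sigmoid(s) for s in scores]
print(f1_score(y_true, [1 if p > 0.5 else 0 for p in probs]))  # about 0.667 at the 0.5 cutoff
print(best_threshold(y_true, probs))                           # about (0.354, 0.8)
```

With these toy scores, lowering the cutoff to about 0.354 recovers the second positive at the cost of one false positive, which raises F1 from 2/3 to 0.8.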

Configure a hyperparameter grid for cross-validation and fitting

search_param_config = {
    "Input": {
        "question": {
            "vectorizer": {
                "model": ["TfidfVectorizer"],
                "env": ["scikit-learn"],
                "max_features": [1000, 2000],
                "ngram_range": [(1, 2)],
            },
        },
        "answer": {
            "vectorizer": {
                "model": ["TfidfVectorizer"],
                "env": ["scikit-learn"],
                "max_features": [1000, 2500, 4000, 6000],
                "ngram_range": [(1, 2)],
            },
        },
    },
    "Classifier": {
        "model": ["LogisticRegression"],
        "C": [0.001, 0.01, 0.1, 1.1],
    },
}
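The nested dictionary mirrors the pipeline's step names: `Input`, then `question`/`answer`, then `vectorizer`, plus `Classifier`. In scikit-learn, nested parameters are addressed with double-underscore keys such as `Classifier__C`. A grid search tries the Cartesian product of all value lists: 2 question `max_features` × 4 answer `max_features` × 4 `C` values = 32 configurations, which matches the count reported by the training cell. In `LogisticRegression`, `C` is the inverse of the regularization strength, so smaller values regularize more. The sketch below assumes `crossval.convert_nested_params` flattens the dictionary this way. The exact keys it produces are not shown in this notebook, and `flatten_params`/`expand_grid` are hypothetical.

```python
from itertools import product
from math import prod

# Same grid as above, repeated so this sketch runs on its own
search_param_config = {
    "Input": {
        "question": {"vectorizer": {"model": ["TfidfVectorizer"], "env": ["scikit-learn"],
                                    "max_features": [1000, 2000], "ngram_range": [(1, 2)]}},
        "answer": {"vectorizer": {"model": ["TfidfVectorizer"], "env": ["scikit-learn"],
                                  "max_features": [1000, 2500, 4000, 6000], "ngram_range": [(1, 2)]}},
    },
    "Classifier": {"model": ["LogisticRegression"], "C": [0.001, 0.01, 0.1, 1.1]},
}


def flatten_params(nested, prefix=""):
    """Join nested keys with '__' (scikit-learn's step__param naming convention)."""
    flat = {}
    for key, value in nested.items():
        name = f"{prefix}__{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten_params(value, name))
        else:
            flat[name] = value
    return flat


def expand_grid(flat):
    """Yield one parameter dict per combination, as a grid search iterates them."""
    keys = list(flat)
    for combo in product(*(flat[k] for k in keys)):
        yield dict(zip(keys, combo))


flat = flatten_params(search_param_config)
print(prod(len(v) for v in flat.values()))  # 32
print(flat["Classifier__C"])                # [0.001, 0.01, 0.1, 1.1]
```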

CV_SEARCH_PARAMS = crossval.convert_nested_params(search_param_config)

Model training

Cross-validate over hyperparameters and select the best model

# Scoring Dict for evaluation

scoring_dict = {'f1':evaluation.get_scorer('f1')}

cv_dict = iqual_model.cross_validate_fit(
    X, y,                                # X: pandas DataFrame of features; y: pandas Series of labels
    search_parameters=CV_SEARCH_PARAMS,  # search_parameters: dictionary of parameters to search during cross-validation
    cv_method='GridSearchCV',            # cv_method: cross-validation method; options: GridSearchCV, RandomizedSearchCV
    scoring=scoring_dict,                # scoring: dictionary of scoring metrics used during cross-validation
    refit='f1',                          # refit: metric used to choose the best configuration for refitting
    n_jobs=-1,                           # n_jobs: number of parallel jobs (-1 uses all available CPU cores)
    cv_splits=3,                         # cv_splits: number of cross-validation folds
)

print()

print("Average F1 score: {:.3f}".format(cv_dict['avg_test_score']))

.......32 hyperparameters configurations possible.....

Average F1 score: 0.759
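Conceptually, `cv_splits=3` means each of the 32 configurations is trained on two-thirds of the 7470 training rows and scored on the remaining third, three times over, and the three F1 scores are averaged. `refit='f1'` then picks the configuration with the best mean F1. In scikit-learn's `GridSearchCV`, that configuration is then refit on all of `X`. The sketch below shows this loop with plain contiguous folds and a hypothetical `fit_and_score` callback. When `cv` is an integer, scikit-learn actually uses stratified folds for classifiers. Whether iqual's `avg_test_score` is exactly this mean is an assumption.

```python
def kfold_indices(n_samples, n_splits):
    """Yield (train_idx, test_idx) for contiguous, unshuffled folds.

    As in scikit-learn's KFold, the first n_samples % n_splits folds
    receive one extra sample.
    """
    base, extra = divmod(n_samples, n_splits)
    start = 0
    for fold in range(n_splits):
        size = base + (1 if fold < extra else 0)
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size


def grid_search(configs, n_samples, n_splits, fit_and_score):
    """Return (best_config, best_mean_score) over k-fold cross-validation."""
    best_cfg, best_mean = None, float("-inf")
    for cfg in configs:
        scores = [fit_and_score(cfg, tr, te) for tr, te in kfold_indices(n_samples, n_splits)]
        mean = sum(scores) / len(scores)
        if mean > best_mean:
            best_cfg, best_mean = cfg, mean
    return best_cfg, best_mean


def toy_fit_and_score(cfg, train_idx, test_idx):
    """Hypothetical scorer: pretends C=0.1 is best, regardless of the fold."""
    return 1.0 - abs(cfg["C"] - 0.1)


print([len(te) for _, te in kfold_indices(7470, 3)])  # [2490, 2490, 2490]
print(grid_search([{"C": c} for c in (0.001, 0.01, 0.1, 1.1)], 30, 3, toy_fit_and_score))
# ({'C': 0.1}, 1.0)
```

In a real run, `fit_and_score` would fit the pipeline on the training indices and return F1 on the held-out fold.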

Evaluate the model on out-of-sample data (held-out human-coded data)

test_X = test_df[['Q_en','A_en']]

test_y = test_df[code_variable]

f1_metric = evaluation.get_metric('f1_score')

f1_score = iqual_model.score(test_X,test_y,scoring_function=f1_metric)

print(f"Out-sample F1-score: {f1_score:.3f}")

Out-sample F1-score: 0.822
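Both the cross-validated and the held-out figures are F1 scores. Assuming the default binary averaging with `marriage` = 1 as the positive label, F1 is the harmonic mean of precision and recall. It is computed from true positives (TP), false positives (FP) and false negatives (FN):

```latex
\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad
\mathrm{Recall} = \frac{TP}{TP + FN}, \qquad
F_1 = \frac{2\,\mathrm{Precision}\cdot\mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}
    = \frac{2\,TP}{2\,TP + FP + FN}
```

True negatives do not appear in the formula, so answers correctly left uncoded do not raise the score. This makes F1 more informative than accuracy when a code is rare.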

Predict labels for unannotated data

uncoded_df[code_variable+'_pred'] = iqual_model.predict(uncoded_df[['Q_en','A_en']])

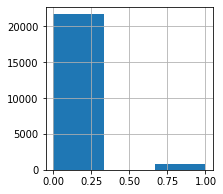

uncoded_df[code_variable+"_pred"].hist(figsize=(3,3),bins=3)

<AxesSubplot:>
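Because the `_pred` column holds only 0/1 labels, a frequency count conveys the same information as the three-bin histogram, and more precisely. In pandas that is `uncoded_df[code_variable + "_pred"].value_counts(normalize=True)`. The stdlib sketch below shows the same computation with a hypothetical `label_summary` helper and toy predictions.

```python
from collections import Counter


def label_summary(preds):
    """Return label counts and the share of positive (1) predictions."""
    counts = Counter(preds)
    share_positive = counts[1] / len(preds) if preds else 0.0
    return dict(sorted(counts.items())), share_positive


toy_preds = [0, 1, 0, 0, 1, 0, 0, 0]  # toy values, not the notebook's predictions
print(label_summary(toy_preds))  # ({0: 6, 1: 2}, 0.25)
```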

Examples of positive predictions

for idx, row in uncoded_df.loc[(uncoded_df[code_variable+"_pred"]==1),['Q_en','A_en']].sample(3).iterrows():
    # Print each sampled question/answer pair, followed by a blank line
    print("Q: ",row['Q_en'],"\n","A: ", row['A_en'],sep='')
    print()
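`.sample(3)` draws different rows on every run. Passing a seed, for example `.sample(3, random_state=0)` in pandas, makes the displayed examples reproducible. Below is a stdlib sketch of the same filter-then-sample step, using a hypothetical `sample_positives` helper and toy rows.

```python
import random


def sample_positives(rows, label_key, k, seed=None):
    """Keep rows predicted positive, then draw up to k of them with a seeded RNG."""
    positives = [r for r in rows if r[label_key] == 1]
    rng = random.Random(seed)
    return rng.sample(positives, min(k, len(positives)))


# Toy rows; odd indices are "predicted positive"
rows = [
    {"Q_en": f"question {i}", "A_en": f"answer {i}", "marriage_pred": i % 2}
    for i in range(10)
]
for row in sample_positives(rows, "marriage_pred", 3, seed=0):
    print("Q: ", row["Q_en"], "\n", "A: ", row["A_en"], sep="")
    print()
```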

Q: Sister, tell us the dreams and hopes you have about your children.

A: You, girls are 5. I will educate the girls. If I get married, I will give it, if I don't get married, I will study more. And 2 sons. He has a dream to do something big by educating his eldest son. If you can't afford it, do business like father. The little boy is still little. I can't talk about him. Want to do the same to both.

Q: So what else do you hope for your son except for you to study?

A: What else is the hope that my eldest child is a girl, so when she grows up, I will marry her. If I had money, I could study well. Now we have to get married. Even then, there is no money, how can I meet the dowry that I want now?

Q: What dreams do you all have about your children? said

A: My dream is that my daughter will study, get a good family and get married. And I gave my son in business, he will run the family by doing business. This is more. Will work hard to run the family. If you don't work, will the money come?