Model - Multiple Classifiers#

Imports#

import os

import pandas as pd

from iqual import iqualnlp, evaluation, crossval

Load annotated (human-coded) and unannotated datasets#

data_dir = "../../data"

human_coded_df = pd.read_csv(os.path.join(data_dir,"annotated.csv"))

uncoded_df = pd.read_csv(os.path.join(data_dir,"unannotated.csv"))

Split the data into training and test sets#

from sklearn.model_selection import train_test_split

train_df, test_df = train_test_split(human_coded_df,test_size=0.25)

print(f"Train Size: {len(train_df)}\nTest Size: {len(test_df)}")

Train Size: 7470

Test Size: 2490

Configure training data#

### Select Question and Answer Columns

question_col = 'Q_en'

answer_col = 'A_en'

### Select a code

code_variable = 'marriage'

### Create X and y

X = train_df[[question_col,answer_col]]

y = train_df[code_variable]

Initiate model#

# Step 1: Initiate the model class

iqual_model = iqualnlp.Model()

# Step 2: Add layers to the model

# Add text columns, and choose a feature extraction model (Available options: scikit-learn, spacy, sentence-transformers, saved-dictionary (picklized dictionary))

iqual_model.add_text_features(question_col,answer_col,model='TfidfVectorizer',env='scikit-learn')

# Step 3: Add a feature transforming layer (optional)

# A. Choose a feature-scaler. Available options:

# any scikit-learn scaler from `sklearn.preprocessing`

### iqual_model.add_feature_transformer(name='StandardScaler', transformation="FeatureScaler")

# OR

# B. Choose a dimensionality reduction model. Available options:

# - Any scikit-learn dimensionality reduction model from `sklearn.decomposition`

# - Uniform Manifold Approximation and Projection (UMAP) using umap.UMAP (https://umap-learn.readthedocs.io/en/latest/)

### iqual_model.add_feature_transformer(name='PCA', transformation="DimensionalityReduction")

# Step 4: Add a classifier layer

# Choose a primary classifier model (Available options: any scikit-learn classifier)

iqual_model.add_classifier(name="LogisticRegression")

# Step 5: Add a threshold layer. This is optional, but recommended for binary classification

iqual_model.add_threshold(scoring_metric='f1')

# Step 6: Compile the model

iqual_model.compile()

Pipeline(steps=[('Input',

FeatureUnion(transformer_list=[('question',

Pipeline(steps=[('selector',

FunctionTransformer(func=<function column_selector at 0x000001CD458EE820>,

kw_args={'column_name': 'Q_en'})),

('vectorizer',

Vectorizer(analyzer='word',

binary=False,

decode_error='strict',

dtype=<class 'numpy.float64'>,

encoding='utf-8',

env='scikit-learn',

input='co...

tokenizer=None,

use_idf=True,

vocabulary=None))]))])),

('Classifier',

Classifier(C=1.0, class_weight=None, dual=False,

fit_intercept=True, intercept_scaling=1,

l1_ratio=None, max_iter=100,

model='LogisticRegression', multi_class='auto',

n_jobs=None, penalty='l2', random_state=None,

solver='lbfgs', tol=0.0001, verbose=0,

warm_start=False)),

('Threshold', BinaryThresholder())])In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook. On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.

Pipeline(steps=[('Input',

FeatureUnion(transformer_list=[('question',

Pipeline(steps=[('selector',

FunctionTransformer(func=<function column_selector at 0x000001CD458EE820>,

kw_args={'column_name': 'Q_en'})),

('vectorizer',

Vectorizer(analyzer='word',

binary=False,

decode_error='strict',

dtype=<class 'numpy.float64'>,

encoding='utf-8',

env='scikit-learn',

input='co...

tokenizer=None,

use_idf=True,

vocabulary=None))]))])),

('Classifier',

Classifier(C=1.0, class_weight=None, dual=False,

fit_intercept=True, intercept_scaling=1,

l1_ratio=None, max_iter=100,

model='LogisticRegression', multi_class='auto',

n_jobs=None, penalty='l2', random_state=None,

solver='lbfgs', tol=0.0001, verbose=0,

warm_start=False)),

('Threshold', BinaryThresholder())])FeatureUnion(transformer_list=[('question',

Pipeline(steps=[('selector',

FunctionTransformer(func=<function column_selector at 0x000001CD458EE820>,

kw_args={'column_name': 'Q_en'})),

('vectorizer',

Vectorizer(analyzer='word',

binary=False,

decode_error='strict',

dtype=<class 'numpy.float64'>,

encoding='utf-8',

env='scikit-learn',

input='content',

lowercase=True,

max...

dtype=<class 'numpy.float64'>,

encoding='utf-8',

env='scikit-learn',

input='content',

lowercase=True,

max_df=1.0,

max_features=None,

min_df=1,

model='TfidfVectorizer',

ngram_range=(1, 1),

norm='l2',

preprocessor=None,

smooth_idf=True,

stop_words=None,

strip_accents=None,

sublinear_tf=False,

token_pattern='(?u)\\b\\w\\w+\\b',

tokenizer=None,

use_idf=True,

vocabulary=None))]))])FunctionTransformer(func=<function column_selector at 0x000001CD458EE820>,

kw_args={'column_name': 'Q_en'})Vectorizer(analyzer='word', binary=False, decode_error='strict',

dtype=<class 'numpy.float64'>, encoding='utf-8', env='scikit-learn',

input='content', lowercase=True, max_df=1.0, max_features=None,

min_df=1, model='TfidfVectorizer', ngram_range=(1, 1), norm='l2',

preprocessor=None, smooth_idf=True, stop_words=None,

strip_accents=None, sublinear_tf=False,

token_pattern='(?u)\\b\\w\\w+\\b', tokenizer=None, use_idf=True,

vocabulary=None)FunctionTransformer(func=<function column_selector at 0x000001CD458EE820>,

kw_args={'column_name': 'A_en'})Vectorizer(analyzer='word', binary=False, decode_error='strict',

dtype=<class 'numpy.float64'>, encoding='utf-8', env='scikit-learn',

input='content', lowercase=True, max_df=1.0, max_features=None,

min_df=1, model='TfidfVectorizer', ngram_range=(1, 1), norm='l2',

preprocessor=None, smooth_idf=True, stop_words=None,

strip_accents=None, sublinear_tf=False,

token_pattern='(?u)\\b\\w\\w+\\b', tokenizer=None, use_idf=True,

vocabulary=None)Classifier(C=1.0, class_weight=None, dual=False, fit_intercept=True,

intercept_scaling=1, l1_ratio=None, max_iter=100,

model='LogisticRegression', multi_class='auto', n_jobs=None,

penalty='l2', random_state=None, solver='lbfgs', tol=0.0001,

verbose=0, warm_start=False)BinaryThresholder()

Configure a Hyperparameter Grid for cross-validation + fitting#

## Paths for precomputed vectors created using `sentence-transformers`

dict_dir = "../../dictionaries"

sbert_models = ["all-mpnet-base-v2", "distiluse-base-multilingual-cased-v2"]

sbert_model_paths = [os.path.join(dict_dir,m+'.pkl') for m in sbert_models]

SBERT_QA_PARAMS = {

"Input":{

"question":{

"vectorizer":{

"model":sbert_model_paths,

"env":["saved-dictionary"],

},

},

"answer":{

"vectorizer":{

"model":sbert_model_paths,

"env":["saved-dictionary"],

},

},

}

}

SBERT_A_PARAMS = {

"Input":{

"question":["drop"],

"answer":{

"vectorizer":{

"model":sbert_model_paths,

"env":["saved-dictionary"],

},

}

}

}

SKLEARN_QA_PARAMS = {

"Input":{

"question":{

"vectorizer":{

"model":['TfidfVectorizer','CountVectorizer'],

"max_features":[500,1000,1500,2500,],

"env":["scikit-learn"],

},

},

"answer":{

"vectorizer":{

"model":['TfidfVectorizer','CountVectorizer'],

"max_features":[1500,2500,4000,],

"env":["scikit-learn"],

},

},

}

}

SKLEARN_A_PARAMS = {

"Input":{

"question":["drop"],

"answer":{

"vectorizer":{

"model":['TfidfVectorizer','CountVectorizer'],

"max_features":[1500,2500,4000,],

"env":["scikit-learn"],

},

}

}

}

LOGISTIC_PARAMS = {

"Classifier":{

"model":["LogisticRegression"],

"C":[0.01,0.1],

},

}

RANDOM_FOREST_PARAMS = {

"Classifier":{

"model":["RandomForestClassifier"],

"n_estimators":[100,200],

"max_depth":[5,10,15],

},

}

SGD_PARAMS = {

"Classifier":{

"model":["SGDClassifier"],

"loss":["hinge","log"],

"alpha":[0.0001,0.001],

},

}

### Combine a Vectorizer and Classifier

VECTORIZATION_PARAMS = [SKLEARN_QA_PARAMS,SKLEARN_A_PARAMS,SBERT_QA_PARAMS,SBERT_A_PARAMS]

CLASSIFIER_PARAMS = [LOGISTIC_PARAMS,RANDOM_FOREST_PARAMS,SGD_PARAMS]

params_all = [{**vect_params, **clf_params} for vect_params in VECTORIZATION_PARAMS for clf_params in CLASSIFIER_PARAMS]

CV_SEARCH_PARAMS = [crossval.convert_nested_params(params) for params in params_all]

print(CV_SEARCH_PARAMS)

[{'Input__question__vectorizer__model': ['TfidfVectorizer', 'CountVectorizer'], 'Input__question__vectorizer__max_features': [500, 1000, 1500, 2500], 'Input__question__vectorizer__env': ['scikit-learn'], 'Input__answer__vectorizer__model': ['TfidfVectorizer', 'CountVectorizer'], 'Input__answer__vectorizer__max_features': [1500, 2500, 4000], 'Input__answer__vectorizer__env': ['scikit-learn'], 'Classifier__model': ['LogisticRegression'], 'Classifier__C': [0.01, 0.1]}, {'Input__question__vectorizer__model': ['TfidfVectorizer', 'CountVectorizer'], 'Input__question__vectorizer__max_features': [500, 1000, 1500, 2500], 'Input__question__vectorizer__env': ['scikit-learn'], 'Input__answer__vectorizer__model': ['TfidfVectorizer', 'CountVectorizer'], 'Input__answer__vectorizer__max_features': [1500, 2500, 4000], 'Input__answer__vectorizer__env': ['scikit-learn'], 'Classifier__model': ['RandomForestClassifier'], 'Classifier__n_estimators': [100, 200], 'Classifier__max_depth': [5, 10, 15]}, {'Input__question__vectorizer__model': ['TfidfVectorizer', 'CountVectorizer'], 'Input__question__vectorizer__max_features': [500, 1000, 1500, 2500], 'Input__question__vectorizer__env': ['scikit-learn'], 'Input__answer__vectorizer__model': ['TfidfVectorizer', 'CountVectorizer'], 'Input__answer__vectorizer__max_features': [1500, 2500, 4000], 'Input__answer__vectorizer__env': ['scikit-learn'], 'Classifier__model': ['SGDClassifier'], 'Classifier__loss': ['hinge', 'log'], 'Classifier__alpha': [0.0001, 0.001]}, {'Input__question': ['drop'], 'Input__answer__vectorizer__model': ['TfidfVectorizer', 'CountVectorizer'], 'Input__answer__vectorizer__max_features': [1500, 2500, 4000], 'Input__answer__vectorizer__env': ['scikit-learn'], 'Classifier__model': ['LogisticRegression'], 'Classifier__C': [0.01, 0.1]}, {'Input__question': ['drop'], 'Input__answer__vectorizer__model': ['TfidfVectorizer', 'CountVectorizer'], 'Input__answer__vectorizer__max_features': [1500, 2500, 4000], 'Input__answer__vectorizer__env': ['scikit-learn'], 'Classifier__model': ['RandomForestClassifier'], 'Classifier__n_estimators': [100, 200], 'Classifier__max_depth': [5, 10, 15]}, {'Input__question': ['drop'], 'Input__answer__vectorizer__model': ['TfidfVectorizer', 'CountVectorizer'], 'Input__answer__vectorizer__max_features': [1500, 2500, 4000], 'Input__answer__vectorizer__env': ['scikit-learn'], 'Classifier__model': ['SGDClassifier'], 'Classifier__loss': ['hinge', 'log'], 'Classifier__alpha': [0.0001, 0.001]}, {'Input__question__vectorizer__model': ['../../dictionaries\\all-mpnet-base-v2.pkl', '../../dictionaries\\distiluse-base-multilingual-cased-v2.pkl'], 'Input__question__vectorizer__env': ['saved-dictionary'], 'Input__answer__vectorizer__model': ['../../dictionaries\\all-mpnet-base-v2.pkl', '../../dictionaries\\distiluse-base-multilingual-cased-v2.pkl'], 'Input__answer__vectorizer__env': ['saved-dictionary'], 'Classifier__model': ['LogisticRegression'], 'Classifier__C': [0.01, 0.1]}, {'Input__question__vectorizer__model': ['../../dictionaries\\all-mpnet-base-v2.pkl', '../../dictionaries\\distiluse-base-multilingual-cased-v2.pkl'], 'Input__question__vectorizer__env': ['saved-dictionary'], 'Input__answer__vectorizer__model': ['../../dictionaries\\all-mpnet-base-v2.pkl', '../../dictionaries\\distiluse-base-multilingual-cased-v2.pkl'], 'Input__answer__vectorizer__env': ['saved-dictionary'], 'Classifier__model': ['RandomForestClassifier'], 'Classifier__n_estimators': [100, 200], 'Classifier__max_depth': [5, 10, 15]}, {'Input__question__vectorizer__model': ['../../dictionaries\\all-mpnet-base-v2.pkl', '../../dictionaries\\distiluse-base-multilingual-cased-v2.pkl'], 'Input__question__vectorizer__env': ['saved-dictionary'], 'Input__answer__vectorizer__model': ['../../dictionaries\\all-mpnet-base-v2.pkl', '../../dictionaries\\distiluse-base-multilingual-cased-v2.pkl'], 'Input__answer__vectorizer__env': ['saved-dictionary'], 'Classifier__model': ['SGDClassifier'], 'Classifier__loss': ['hinge', 'log'], 'Classifier__alpha': [0.0001, 0.001]}, {'Input__question': ['drop'], 'Input__answer__vectorizer__model': ['../../dictionaries\\all-mpnet-base-v2.pkl', '../../dictionaries\\distiluse-base-multilingual-cased-v2.pkl'], 'Input__answer__vectorizer__env': ['saved-dictionary'], 'Classifier__model': ['LogisticRegression'], 'Classifier__C': [0.01, 0.1]}, {'Input__question': ['drop'], 'Input__answer__vectorizer__model': ['../../dictionaries\\all-mpnet-base-v2.pkl', '../../dictionaries\\distiluse-base-multilingual-cased-v2.pkl'], 'Input__answer__vectorizer__env': ['saved-dictionary'], 'Classifier__model': ['RandomForestClassifier'], 'Classifier__n_estimators': [100, 200], 'Classifier__max_depth': [5, 10, 15]}, {'Input__question': ['drop'], 'Input__answer__vectorizer__model': ['../../dictionaries\\all-mpnet-base-v2.pkl', '../../dictionaries\\distiluse-base-multilingual-cased-v2.pkl'], 'Input__answer__vectorizer__env': ['saved-dictionary'], 'Classifier__model': ['SGDClassifier'], 'Classifier__loss': ['hinge', 'log'], 'Classifier__alpha': [0.0001, 0.001]}]

Model training#

Cross-validate over hyperparameters and select the best model

# Scoring Dict for evaluation

scoring_dict = {'f1':evaluation.get_scorer('f1')}

cv_dict = iqual_model.cross_validate_fit(

X,y, # X: Pandas DataFrame of features, y: Pandas Series of labels

search_parameters=CV_SEARCH_PARAMS, # search_parameters: Dictionary of parameters to use for cross-validation

cv_method='RandomizedSearchCV', # cv_method: Cross-validation method to use, options: GridSearchCV, RandomizedSearchCV

n_iter=30, # n_iter: Only when cv_method='RandomizedSearchCV'

scoring=scoring_dict, # scoring: Scoring metric to use for cross-validation

refit='f1', # refit: Metric to use for refitting the model

n_jobs=-1, # n_jobs: Number of parallel threads to use

cv_splits=3, # cv_splits: Number of cross-validation splits

)

print()

print("Average F1 score: {:.3f}".format(cv_dict['avg_test_score']))

.......720 hyperparameters configurations possible.....

Average F1 score: 0.843

Check Best Model#

params = iqual_model.get_params()

print(f"Q vectorizer: {params.get('Input__question__vectorizer__model','Dropped')}")

print(f"A vectorizer: {params.get('Input__answer__vectorizer__model')}")

print(f"Classifier: {params.get('Classifier__model')}")

print(f"Threshold for classification: {params.get('Threshold__threshold'):.3f}")

iqual_model.model

Q vectorizer: Dropped

A vectorizer: ../../dictionaries\distiluse-base-multilingual-cased-v2.pkl

Classifier: SGDClassifier

Threshold for classification: 0.377

Pipeline(steps=[('Input',

FeatureUnion(transformer_list=[('question', 'drop'),

('answer',

Pipeline(steps=[('selector',

FunctionTransformer(func=<function column_selector at 0x000001CD458EE820>,

kw_args={'column_name': 'A_en'})),

('vectorizer',

Vectorizer(env='saved-dictionary',

model='../../dictionaries\\distiluse-base-multilingual-cased-v2.pkl'))]))])),

('Class...

fit_intercept=True, l1_ratio=0.15,

learning_rate='optimal', loss='hinge',

max_iter=1000, model='SGDClassifier',

n_iter_no_change=5, n_jobs=None, penalty='l2',

power_t=0.5, random_state=None, shuffle=True,

tol=0.001, validation_fraction=0.1, verbose=0,

warm_start=False)),

('Threshold',

BinaryThresholder(threshold=0.3765454917119333,

threshold_range=(0.08156779228110662,

0.8708324475149403)))])In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook. On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.

Pipeline(steps=[('Input',

FeatureUnion(transformer_list=[('question', 'drop'),

('answer',

Pipeline(steps=[('selector',

FunctionTransformer(func=<function column_selector at 0x000001CD458EE820>,

kw_args={'column_name': 'A_en'})),

('vectorizer',

Vectorizer(env='saved-dictionary',

model='../../dictionaries\\distiluse-base-multilingual-cased-v2.pkl'))]))])),

('Class...

fit_intercept=True, l1_ratio=0.15,

learning_rate='optimal', loss='hinge',

max_iter=1000, model='SGDClassifier',

n_iter_no_change=5, n_jobs=None, penalty='l2',

power_t=0.5, random_state=None, shuffle=True,

tol=0.001, validation_fraction=0.1, verbose=0,

warm_start=False)),

('Threshold',

BinaryThresholder(threshold=0.3765454917119333,

threshold_range=(0.08156779228110662,

0.8708324475149403)))])FeatureUnion(transformer_list=[('question', 'drop'),

('answer',

Pipeline(steps=[('selector',

FunctionTransformer(func=<function column_selector at 0x000001CD458EE820>,

kw_args={'column_name': 'A_en'})),

('vectorizer',

Vectorizer(env='saved-dictionary',

model='../../dictionaries\\distiluse-base-multilingual-cased-v2.pkl'))]))])drop

FunctionTransformer(func=<function column_selector at 0x000001CD458EE820>,

kw_args={'column_name': 'A_en'})Vectorizer(env='saved-dictionary',

model='../../dictionaries\\distiluse-base-multilingual-cased-v2.pkl')Classifier(alpha=0.001, average=False, class_weight=None, early_stopping=False,

epsilon=0.1, eta0=0.0, fit_intercept=True, l1_ratio=0.15,

learning_rate='optimal', loss='hinge', max_iter=1000,

model='SGDClassifier', n_iter_no_change=5, n_jobs=None, penalty='l2',

power_t=0.5, random_state=None, shuffle=True, tol=0.001,

validation_fraction=0.1, verbose=0, warm_start=False)BinaryThresholder(threshold=0.3765454917119333,

threshold_range=(0.08156779228110662, 0.8708324475149403))Evaluate model using out sample data (Held out human-coded data)#

test_X = test_df[['Q_en','A_en']]

test_y = test_df[code_variable]

f1_score = iqual_model.score(test_X,test_y,

scoring_function=evaluation.get_metric('f1_score'))

print(f"Out-sample F1-score: {f1_score:.3f}")

accuracy = iqual_model.score(test_X,test_y,

scoring_function=evaluation.get_metric('accuracy_score'))

print(f"Out-sample accuracy-score: {accuracy:.3f}")

Out-sample F1-score: 0.803

Out-sample accuracy-score: 0.980

Predict labels for unannotated data#

uncoded_df[code_variable+'_pred'] = iqual_model.predict(uncoded_df[['Q_en','A_en']])

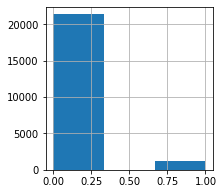

uncoded_df[code_variable+"_pred"].hist(figsize=(3,3),bins=3)

<AxesSubplot:>

Examples for positive predictions#

for idx, row in uncoded_df.loc[(uncoded_df[code_variable+"_pred"]==1),['Q_en','A_en']].sample(3).iterrows():

print("Q: ",row['Q_en'],"\n","A: ", row['A_en'],sep='')

print()

Q: Yes, younger sisters will be married. Are these?

A: Yes they are. As the sister is fit for marriage, the marriage should be given. There are many offers. I can't get married. still waiting

Q: What other dreams do you have about him without work?

A: When I grow up, I will marry you.

Q: It means marry.

A: Yes, I will marry. That's right.